Exploring the link between synthetic data quality, model design, and enterprise epistemology.


December 8, 2025


For too long, we have been archivists of the past. Our data infrastructures, no matter how modern, are largely designed to record, store, and query events that have already happened. This historical record is vital, but it is also a cage. It limits our strategic vision to the corridors of what has already been, preventing us from building a true understanding of what could be. The emergence of high-fidelity synthetic data is not just another technical tool. It is a fundamental shift that forces us to renegotiate the very boundary between knowledge and simulation.

Traditional data is observational. A customer clicks a button, a machine records a temperature, a transaction clears. Our knowledge is derived from this evidence. We analyze these facts to find patterns and make inferences. This is a reactive stance.

Synthetic data is premised on a different kind of knowledge. It is generative. It does not record an event; it embodies a model of a system. To create useful synthetic financial transactions, you must encode the rules of fraud, the patterns of legitimate behavior, and the market mechanics. The resulting data is not a fact in itself, but a manifestation of your understanding. The quality of the data is a direct reflection of the depth and accuracy of that understanding. This moves us from asking "What do the data tell us happened?" to a more challenging and powerful question: "What do our models tell us can happen?"

This transition demands a different architectural discipline. Many organizations are mired in data chaos, struggling to build clean data lakes or warehouses. The next step is to build a reality lab.

A reality lab is not a repository. It is a generative system. It requires a rigorous, methodical approach to defining the parameters, relationships, and stochastic processes that define your business domain. This is where deep software and data engineering expertise becomes paramount. It is not about generating random data. It is about building a digital twin of your customer journey, your supply chain, or your manufacturing process that is robust enough to produce valid, insightful data. The focus shifts from data cleansing to model validation. The most significant cost is no longer storage; it is the intellectual rigor of crafting a faithful simulation.
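To make this concrete, here is a minimal Python sketch of what "defining the parameters and stochastic processes" might look like for the synthetic financial transactions described earlier. Everything in it is an illustrative assumption, not a calibrated model. The `SimulationParams` fields, the log-normal amount distributions, and the idea that fraud skews toward larger, night-time transactions are all hypothetical choices. The point is that every behavioral assumption becomes an explicit, named parameter, and a fixed random seed makes each run reproducible. That lets the model be inspected and audited rather than taken on faith.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulationParams:
    # Hypothetical, uncalibrated parameters: each one is an explicit assumption
    # about the domain that can be questioned, tuned, and audited.
    fraud_rate: float = 0.02         # share of transactions that are fraudulent
    legit_mu: float = 3.5            # log-scale mean of legitimate amounts
    legit_sigma: float = 0.8         # log-scale spread of legitimate amounts
    fraud_mu: float = 5.0            # assumption: fraud skews toward larger amounts
    fraud_sigma: float = 1.0
    night_share_legit: float = 0.1   # chance a legitimate transaction occurs at night
    night_share_fraud: float = 0.6   # assumption: fraud clusters at night


def generate_transactions(params: SimulationParams, n: int, seed: int = 0) -> list[dict]:
    """Draw n synthetic transactions from an explicit, inspectable model."""
    rng = random.Random(seed)  # a fixed seed makes every run reproducible
    rows = []
    for i in range(n):
        # First decide which behavior generates this row, then sample from it.
        is_fraud = rng.random() < params.fraud_rate
        if is_fraud:
            mu, sigma, night_p = params.fraud_mu, params.fraud_sigma, params.night_share_fraud
        else:
            mu, sigma, night_p = params.legit_mu, params.legit_sigma, params.night_share_legit
        rows.append({
            "id": i,
            "amount": round(rng.lognormvariate(mu, sigma), 2),
            "at_night": rng.random() < night_p,
            "is_fraud": is_fraud,
        })
    return rows
```

For example, `generate_transactions(SimulationParams(), 10_000, seed=42)` yields ten thousand rows. Changing `fraud_rate` or `night_share_fraud` and regenerating is the kind of what-if question a reality lab is meant to answer, and the cost of answering it well lies in justifying those parameter values, not in running the code.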

This power introduces a profound responsibility. The central risk of synthetic data is the creation of a convincing fantasy. A poorly conceived model will generate data that looks plausible but reinforces biases, overlooks critical edge cases, or misrepresents fundamental dynamics. The model's flaws become a self-fulfilling prophecy, leading to strategies that are perfectly optimized for a world that does not exist.

Therefore, the core challenge is not technical but epistemic. How do we know that what we know is true? With traditional data, we audit the data collection process. With synthetic data, we must audit the model itself. This requires a level of discipline often missing from data projects. It demands clear success indicators for the simulation, not just for the analytics run on it. We must be able to articulate the boundaries of the simulation and have the humility to recognize where our model of reality is incomplete.
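One way to give a simulation its own success indicators is to score it against a held-out sample of real data using named, pre-agreed thresholds. The Python sketch below is a hypothetical illustration, not a complete validation framework. It assumes rows shaped like `{"amount": float, "is_fraud": bool}`, compares the amount distributions with a two-sample Kolmogorov-Smirnov statistic, and compares fraud rates directly. The function names and threshold values (`max_ks=0.05`, `max_rate_gap=0.005`) are placeholders; in practice the thresholds would be set by people who understand the domain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    name: str
    value: float      # measured discrepancy between synthetic and reference data
    threshold: float  # largest discrepancy we agreed in advance to accept
    passed: bool


def ks_statistic(a: list[float], b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Step both empirical CDFs past every value <= x, then compare them.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def audit_simulation(synthetic: list[dict], reference: list[dict],
                     max_ks: float = 0.05, max_rate_gap: float = 0.005) -> list[CheckResult]:
    """Score a simulation against held-out reference data using explicit, named criteria."""
    def fraud_rate(rows: list[dict]) -> float:
        return sum(r["is_fraud"] for r in rows) / len(rows)

    ks = ks_statistic([r["amount"] for r in synthetic], [r["amount"] for r in reference])
    rate_gap = abs(fraud_rate(synthetic) - fraud_rate(reference))
    return [
        CheckResult("amount_distribution_ks", ks, max_ks, ks <= max_ks),
        CheckResult("fraud_rate_gap", rate_gap, max_rate_gap, rate_gap <= max_rate_gap),
    ]
```

Passing these two checks says only that the simulation matches the reference on two marginal statistics. It says nothing about correlations, rare edge cases, or behavior outside the range the reference data covers, and listing what is not checked is part of articulating the simulation's boundaries.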

Synthetic data is the ultimate tool for breaking free from operational inefficiency and strategic myopia. It allows leaders to move beyond optimizing the last quarter and start stress testing the next decade. You can simulate the impact of a new market entry, probe the resilience of your logistics network against unprecedented disruption, or train a critical machine learning model without ever compromising a single real customer record.

This is not a future possibility. It is a present-day discipline. The organizations that will thrive are those that stop seeing data as a passive asset to be mined and start treating it as an active medium to be sculpted. They will invest not only in the platforms to store data but in the expertise to build these generative models with precision and intellectual honesty. They will understand that in the modern enterprise, the most valuable data may not come from the world you operate in, but from the world you have the courage and discipline to simulate.

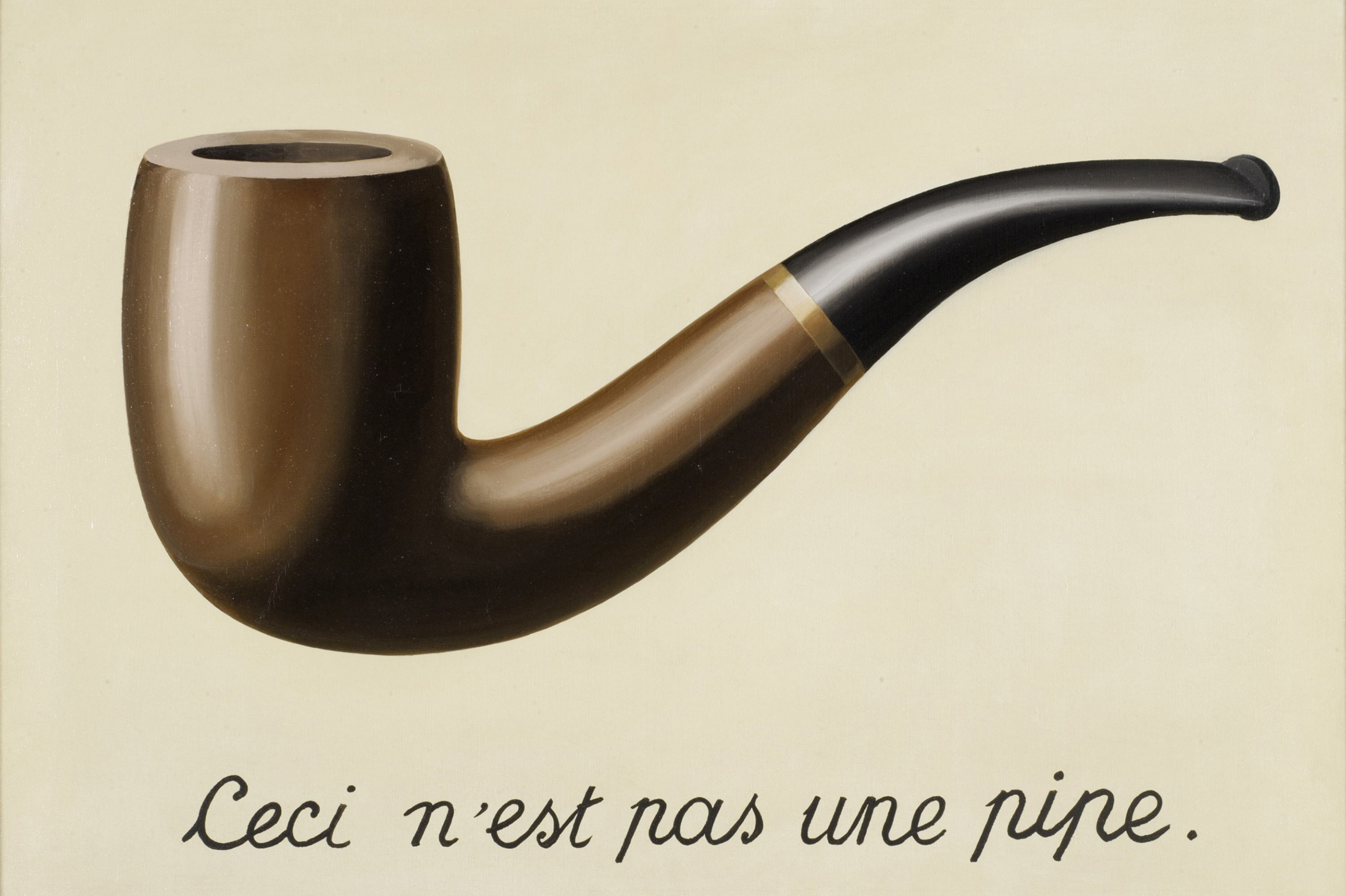

About the Art

Magritte’s The Treachery of Images (1929) feels right for this article, because it captures a tension I keep returning to in my work: the moment when something looks precise enough to trust, yet still isn’t the thing itself. The painting forces you to sit inside the space between fidelity and truth. Synthetic data lives there too. A simulation can resemble reality so convincingly that you forget the world behind it is constructed. This piece holds that discomfort in a quiet, disciplined way: a reminder that resemblance is a starting point, not a guarantee of understanding.

Source: https://historia-arte.com/obras/la-traicion-de-las-imagenes
