How reasonable choices lead to fragile systems.

July 17, 2025

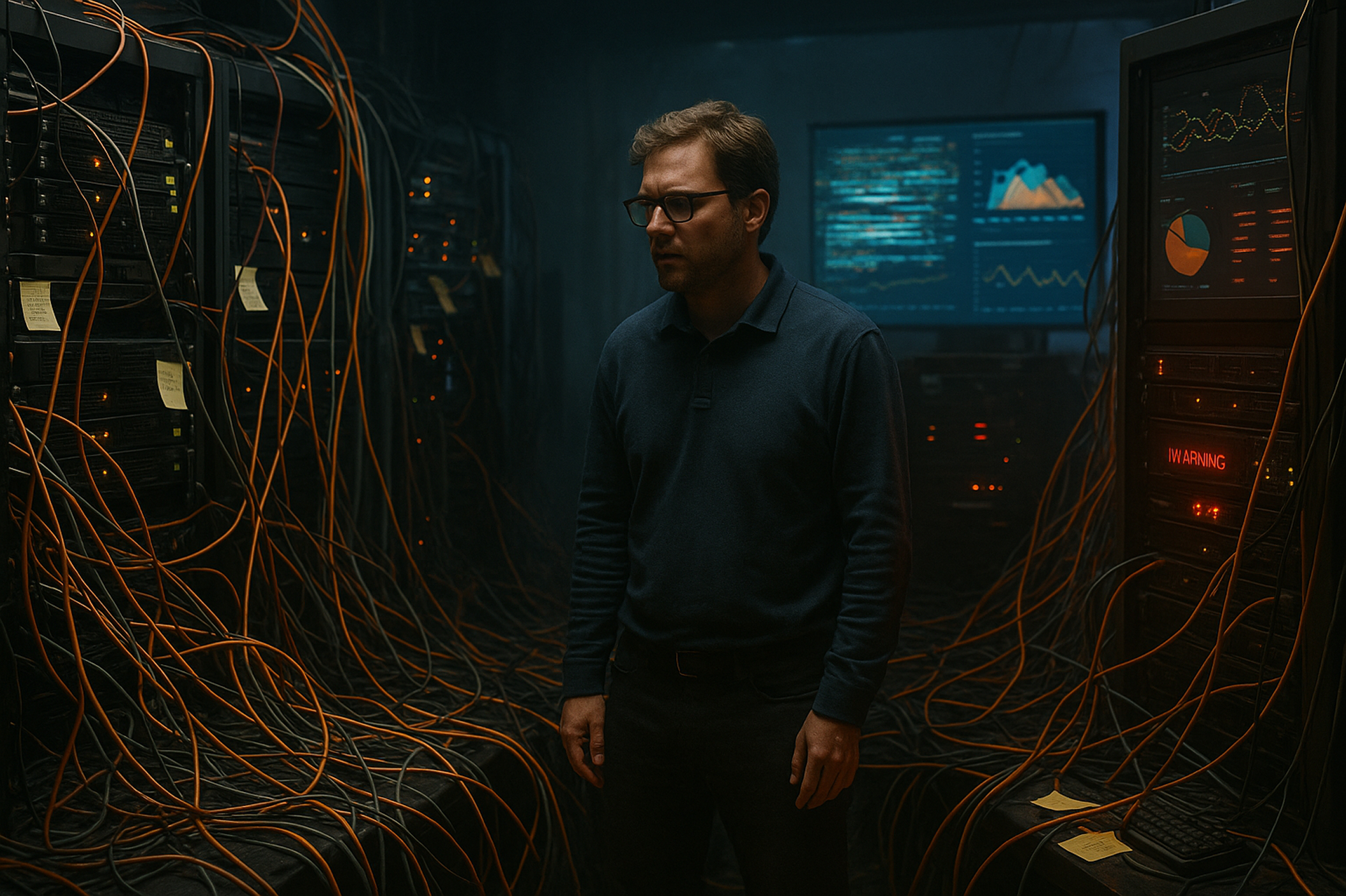

Data systems rarely fail suddenly. More often, they degrade gradually through a series of seemingly reasonable compromises. What begins as a straightforward architecture designed with clear principles inevitably faces the pressures of real-world demands. A sales team needs a custom report by tomorrow. A new compliance requirement demands immediate tracking. An executive requests a dashboard visualizing metrics in a novel way.

Each request appears reasonable in isolation, but collectively they initiate a dangerous pattern. Teams begin bypassing established data governance protocols in the name of urgency. Developers implement quick fixes that circumvent proper pipeline architecture. New tools get introduced without proper evaluation of how they integrate with existing systems. Over time, these exceptions become the rule, and the architecture loses its original coherence.

The most pernicious aspect of this decay is how invisible it remains until critical functionality breaks. Teams adapt to growing complexity by developing institutional knowledge about which systems to trust (and which to avoid), which reports require manual validation, and which pipelines are prone to failure. This adaptation masks the problem, allowing inefficiency to become normalized.

Technical debt in data systems compounds in ways that differ fundamentally from other software domains. Each new component doesn't just add its own maintenance burden; it also enlarges the integration surface, because it can potentially interact with every component already in place. Consider a typical scenario:

A marketing team adopts a new analytics platform without central oversight. This creates immediate needs for data extracts from the core warehouse, new transformation logic to align metrics with existing definitions, and custom connectors to operational systems. The platform's data models diverge from organizational standards, requiring reconciliation logic. Soon, other departments request access to this data, spawning new pipelines and reports.

Within months, what began as a single tool has spawned dozens of dependencies. Questions about data consistency arise, but no one fully understands all the touchpoints. Changes to core systems start breaking downstream reports no one knew were connected. The organization finds itself trapped in a cycle where adding new capabilities makes the entire system more fragile.
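A rough way to see why a single tool can spawn so many dependencies: in the worst case, where every component may need to exchange data with every other, the number of potential integration points grows with the square of the component count. The sketch below is purely illustrative; the function name `potential_integrations` and the worst-case assumption are introduced here and do not come from any particular tool.

```python
def potential_integrations(component_count: int) -> int:
    """Worst-case number of pairwise integration points among components.

    Assumes (for illustration only) that any two components might need
    a connector, extract, or reconciliation step between them.
    """
    if component_count < 0:
        raise ValueError("component_count must be non-negative")
    return component_count * (component_count - 1) // 2


if __name__ == "__main__":
    for n in (5, 10, 20):
        print(f"{n} components -> {potential_integrations(n)} potential integration points")
```

Real systems rarely connect everything to everything, so this is an upper bound, but it shows why each addition costs more than the one before it.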

The consequences extend far beyond technical challenges. As complexity grows, it reshapes how teams work and make decisions:

Cognitive load increases dramatically as engineers must now understand multiple overlapping systems to implement even simple changes. The mental model of how data flows through the organization becomes impossibly convoluted, leading to hesitation and risk aversion.

Decision velocity slows as stakeholders lose confidence in data quality. Meetings that should focus on business strategy devolve into debates about which numbers to trust. Leaders delay critical choices while waiting for "one source of truth" that never materializes.

Talent retention suffers as skilled engineers grow frustrated maintaining brittle systems. The most capable team members, who could be driving innovation, instead spend their time firefighting and reconciling data discrepancies.

Innovation stagnates because the cost of implementing new features becomes prohibitive. Teams learn to avoid ambitious projects, knowing they'll likely break some critical but poorly understood dependency.

Reversing this decline requires both technical and organizational changes. The solution begins with acknowledging that all complex systems tend toward disorder unless actively maintained. Several key principles can guide the restoration effort:

First, establish clear architectural governance with teeth. This doesn't mean bureaucratic approval processes, but rather well-defined standards for how components should interact and clear ownership of integration points. Effective governance balances flexibility with just enough structure to prevent fragmentation.

Second, implement a rigorous deprecation discipline. For every new tool or pipeline added, identify an existing one to remove. Maintain an architectural runway by continuously retiring outdated components before they become legacy systems. This requires cultural commitment to viewing system simplification as equally important as feature development.
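The one-in, one-out rule can be made checkable rather than aspirational. The following is a minimal sketch, assuming architecture changes are recorded as proposals that list what they add and what they retire. The `ChangeProposal` class and the component names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeProposal:
    """A hypothetical record of one architecture change."""
    adds: list[str] = field(default_factory=list)      # new tools or pipelines
    retires: list[str] = field(default_factory=list)   # components being removed


def violates_one_in_one_out(proposal: ChangeProposal) -> bool:
    """Return True when the proposal adds more components than it retires."""
    return len(proposal.adds) > len(proposal.retires)


if __name__ == "__main__":
    balanced = ChangeProposal(adds=["new_analytics_platform"], retires=["legacy_report_job"])
    unbalanced = ChangeProposal(adds=["new_analytics_platform"])
    print(violates_one_in_one_out(balanced))
    print(violates_one_in_one_out(unbalanced))
```

A check like this could run wherever proposals are reviewed. The point is only that the rule becomes visible at the moment a component is added, not months later.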

Third, invest in comprehensive observability. Complex systems can remain manageable if their behavior is transparent. Implement lineage tracking that shows data flows across all systems, not just within individual platforms. Build monitoring that alerts teams to inconsistencies before they affect decision-making.
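As a minimal sketch of what cross-system lineage and consistency monitoring might look like, the example below models lineage as a plain dictionary and walks it to find everything downstream of an asset. It then flags two reported values of the same metric that drift apart. The asset names, the `LINEAGE` mapping, and the 1% tolerance are all assumptions made for illustration, not features of any specific lineage tool.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the assets listed as its value.
LINEAGE = {
    "core_warehouse.orders": ["marketing_platform.extract", "finance.revenue_report"],
    "marketing_platform.extract": ["marketing.campaign_dashboard"],
    "marketing.campaign_dashboard": [],
    "finance.revenue_report": [],
}


def downstream_of(asset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Return every asset reachable from `asset`, in breadth-first order."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(lineage.get(asset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited via another path
        seen.add(current)
        order.append(current)
        queue.extend(lineage.get(current, []))
    return order


def metrics_disagree(a: float, b: float, relative_tolerance: float = 0.01) -> bool:
    """Flag two values of the same metric that differ by more than the tolerance."""
    baseline = max(abs(a), abs(b))
    if baseline == 0:
        return False  # both values are zero, so they agree
    return abs(a - b) / baseline > relative_tolerance


if __name__ == "__main__":
    print(downstream_of("core_warehouse.orders", LINEAGE))
    print(metrics_disagree(100.0, 110.0))
```

Before changing `core_warehouse.orders`, a team could list its downstream assets and notify their owners. The disagreement check is the kind of test that could run on a schedule and alert before a dashboard reaches a decision-maker.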

Fourth, prioritize conceptual integrity in design. Resist the temptation to implement special-case solutions, no matter how urgent they appear. Each exception creates future complexity that will cost far more than the immediate inconvenience of doing things properly.

Organizations that master this discipline gain significant advantages. Their teams spend less time maintaining systems and more time creating value. Decision-makers act with confidence because they trust their data. New capabilities can be added quickly because the foundation remains clean.

Perhaps most importantly, these organizations avoid the innovation paradox that traps so many others, where the very systems meant to provide competitive advantage instead become anchors dragging down progress. In an era where data-driven insight separates market leaders from laggards, architectural coherence is more than technical excellence; it is business strategy.

The path forward requires recognizing that data systems, like urban infrastructure, need continuous care and intentional design. Left unattended, they inevitably decay. But with disciplined stewardship, they can remain powerful enablers of growth and insight for years to come.
