Building explainable human–AI decision systems that balance judgment, trust, and accountability.

December 8, 2025

We have entered the era of the composite system, where artificial intelligence and human expertise are woven together into a single decision-making fabric. The grand challenge is managing the inevitable, and necessary, moments of disagreement between the human and the machine. Most system designs treat this disagreement as a failure mode, an exception to be logged and forgotten. This is a profound and costly mistake. I believe the mechanism for resolving this disagreement, which I term the Arbitration Interface, is the most critical and neglected component in modern system design. Getting it right is what separates a clever prototype from a robust, enterprise-grade asset.

The common refrain is to demand "explainability," to crack open the black box and show the user why the model reached its conclusion. This is a worthy goal, but it is insufficient. A technical explanation of feature weights or activation paths is often just another form of data clutter for a human decision-maker. It provides rationale, but not necessarily reason. The real task is not to explain the model's internals, but to justify its output in a way that enables a human to exercise their judgment effectively. We must move beyond explainability and towards justifiability.

A justifiable output provides the evidence a human needs to perform their own sense-making. Think of it as building a legal case rather than publishing a scientific paper.

An effective Arbitration Interface presents the primary recommendation alongside curated, contextual evidence. This is not a raw data dump. It is the presentation of the two or three most salient data points that most heavily influenced the model's verdict. For a fraud detection system, this means showing the specific transactions that were anomalous and how they compare to the user's established behavioral baseline. For a diagnostic tool, it means highlighting the key indicators that correlate with the prognosis, not just the final probability score.
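To make the idea of curated evidence concrete, here is a minimal Python sketch. It assumes the model can supply a signed contribution score for each candidate data point. The names `Evidence`, `Recommendation`, and `build_recommendation`, and the choice to rank by absolute contribution, are illustrative assumptions rather than a prescribed design.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Evidence:
    label: str           # human-readable description of the data point
    contribution: float  # signed influence on the verdict (hypothetical score)
    baseline_note: str   # how the point compares to the established baseline


@dataclass(frozen=True)
class Recommendation:
    verdict: str
    confidence: float
    evidence: Tuple[Evidence, ...]


def build_recommendation(
    verdict: str,
    confidence: float,
    candidates: Iterable[Evidence],
    top_k: int = 3,
) -> Recommendation:
    """Keep only the top_k most influential data points, not the raw dump."""
    ranked = sorted(candidates, key=lambda e: abs(e.contribution), reverse=True)
    return Recommendation(verdict, confidence, tuple(ranked[:top_k]))


# Hypothetical fraud-detection example.
candidates = [
    Evidence("Transfer at 03:12 local time", 0.42, "user normally active 08:00-22:00"),
    Evidence("New payee in a new country", 0.61, "no prior international payees"),
    Evidence("Amount of 4,900", 0.35, "typical transfer is under 300"),
    Evidence("Device fingerprint unchanged", -0.08, "matches known device"),
]
rec = build_recommendation("flag for review", 0.87, candidates)
for item in rec.evidence:
    print(f"{item.label} ({item.baseline_note})")
```

The interface then renders `rec.verdict` with those few items, so the reviewer sees the argument rather than the full feature vector.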

This approach reframes the AI from an oracle to an expert witness. The system is not issuing a command, but rather presenting a reasoned argument. This shift is fundamental as it respects the human's role as the judge and invites them into a collaborative process. The interface must be designed to answer the user's immediate, unspoken question: "What does the system see that I do not, and is that evidence compelling to me?"

A moment of disagreement is a precious source of truth, a labeled data point of immense value. Most organizations squander it. Capturing the human's override is a start, but it is the bare minimum. The critical design question is: how do we capture the reasoning behind the override?

A simple accept/reject button is a data dead end. We must design the override process as a structured data collection event. When a user rejects the AI's recommendation, the interface should require them to select from a set of justified reasons. These are not free-text comments, but predefined labels that map to specific failure modes or contextual nuances the model may be missing. Options might include "Business context invalidates alert," "Historical precedent for this exception," or "Insufficient evidence to act."
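One way to enforce this is at the data-model level, so that a rejection cannot be recorded without a reason. The sketch below uses the three example labels from the text. The `OverrideReason` and `Decision` names and the validation rules are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class OverrideReason(Enum):
    # Labels taken from the examples above; a real system would define its own.
    BUSINESS_CONTEXT = "Business context invalidates alert"
    HISTORICAL_PRECEDENT = "Historical precedent for this exception"
    INSUFFICIENT_EVIDENCE = "Insufficient evidence to act"


@dataclass(frozen=True)
class Decision:
    recommendation_id: str  # hypothetical identifier for the AI recommendation
    accepted: bool
    reason: Optional[OverrideReason] = None

    def __post_init__(self) -> None:
        # A rejection without a structured reason is the "data dead end".
        if not self.accepted and self.reason is None:
            raise ValueError("a rejection must carry an override reason")
        if self.accepted and self.reason is not None:
            raise ValueError("an accepted recommendation takes no override reason")


# A valid override: the reviewer rejects and says why.
override = Decision("rec-001", accepted=False, reason=OverrideReason.BUSINESS_CONTEXT)
```

Because the reason is an enum and not free text, every override lands in a fixed set of categories that can be counted and compared later.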

This disciplined approach transforms a subjective opinion into a quantifiable signal. It creates a rich, labeled dataset of the model's blind spots and the organization's unique edge cases. This dataset becomes the primary fuel for the next cycle of model refinement. This is not a simple feedback loop; it is a judgment feedback loop, where human expertise directly tutors the artificial intelligence on the nuances it lacks.
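As a rough illustration of how structured overrides become a quantifiable signal, the sketch below tallies override reasons and reports each one's share of the total. The function name `summarize_overrides` and the sample data are hypothetical. A real pipeline would likely also segment by model version, alert type, or time window.

```python
from collections import Counter
from typing import Dict, Iterable


def summarize_overrides(reasons: Iterable[str]) -> Dict[str, float]:
    """Return each override reason's share of all overrides, most common first."""
    counts = Counter(reasons)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {reason: n / total for reason, n in counts.most_common()}


# Hypothetical log of reasons selected by reviewers.
logged = [
    "Business context invalidates alert",
    "Insufficient evidence to act",
    "Business context invalidates alert",
    "Historical precedent for this exception",
]
summary = summarize_overrides(logged)
# summary["Business context invalidates alert"] is 0.5 for this sample
```

A reason that dominates the summary points to a specific blind spot, which helps decide what data or features the next model iteration should address.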

Neglecting the Arbitration Interface is a strategic error with compounding costs. Without a designed system for arbitration, you create one of two dysfunctional outcomes. The first is automation distrust, where users, lacking clarity or justification, simply ignore the AI's recommendations, rendering your investment inert. The second is automation bias, where users, fatigued by a poorly designed interface or lacking the tools to disagree effectively, blindly follow the system's output, abdicating their own valuable judgment.
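Both failure modes can leave a trace in the override rate. The heuristic below is only a sketch: the function name, the status labels, and the thresholds of 5% and 60% are arbitrary assumptions, and an extreme rate is a prompt for investigation, not a diagnosis.

```python
def classify_arbitration_health(
    accepted: int,
    rejected: int,
    low: float = 0.05,   # assumed floor: below this, reviewers almost never disagree
    high: float = 0.60,  # assumed ceiling: above this, recommendations are mostly ignored
) -> str:
    """Flag override rates that may indicate automation bias or distrust."""
    total = accepted + rejected
    if total == 0:
        return "no-data"
    override_rate = rejected / total
    if override_rate >= high:
        return "possible-automation-distrust"
    if override_rate <= low:
        return "possible-automation-bias"
    return "within-expected-range"


status = classify_arbitration_health(accepted=98, rejected=2)
```

A very low override rate can also mean the model is simply accurate, so the signal is most useful when read alongside the recorded override reasons and outcome data.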

A well-designed Arbitration Interface navigates between these two pitfalls. It builds trust through transparency of reasoning, not just transparency of mechanics. It formalizes human oversight, making it a scalable, integral part of the operational process rather than an ad-hoc interruption. This is how we combat data dysfunction at the decision point. It is the methodical application of structure to the most human part of the system.

This is about building systems that are wise. Wisdom in a human-AI system is the emergent property of a well-orchestrated collaboration between statistical inference and contextual judgment. By elevating the Arbitration Interface from an afterthought to a first-class design concern, we stop building systems that simply process data and start building partners that help us make better decisions.

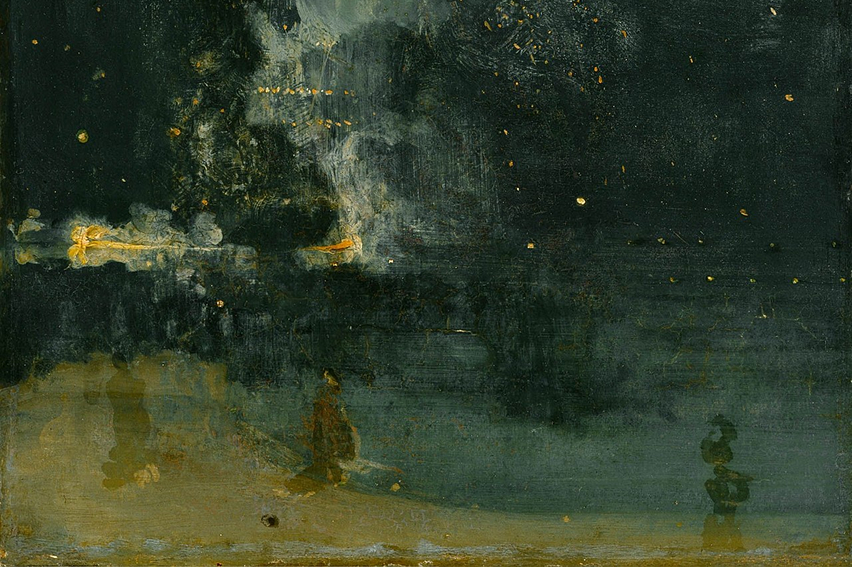

About the Art

I chose Guernica by Pablo Picasso (1937). There’s something about its fractured figures and chaotic energy that feels deeply human... the struggle to make sense of conflict. To me, it echoes the tension in every human–AI disagreement, when clarity has to be rebuilt from fragments of understanding.

Source: https://www.highresolutionart.com/2013/11/guernica-pablo-picasso-1937.html
