Why enterprises must start treating explainability as a core architectural discipline, not a compliance checkbox.

December 8, 2025

In our race to harness artificial intelligence, we are quietly accumulating a liability that will define the next decade of enterprise software. We have become adept at measuring model accuracy and performance, but we are largely ignoring a more insidious risk. I call it "explainability debt": a cousin of technical debt, but with far greater potential for strategic and ethical failure.

Technical debt describes the future cost of easy, short-term code. Explainability debt describes the future cost of opaque, unquestioned models. Every time we deploy a system that makes decisions we cannot logically trace, we take on a liability. This debt does not appear on financial statements, but it grows in the dark, compounding silently until it threatens the very integrity of our data-driven ambitions.

The initial accrual of explainability debt is almost always rationalized by a single metric: performance. A complex ensemble or deep learning model delivers a marginal lift in accuracy on a test set, and the business pressure to deploy is immense. We convince ourselves that we can figure out the "why" later. This is a Faustian bargain.

This focus on raw performance is a form of data dysfunction. It prioritizes a narrow, technical outcome over the broader business need for strategic clarity. A model that cannot be understood is a model that cannot be truly trusted. It becomes a black-box oracle; we feed it inputs and obey its outputs without the capacity for reasoned judgment. This is not intelligence. It is automation without wisdom, and it cedes strategic control to a system whose reasoning is unknown.

The consequences of unmanaged explainability debt are not theoretical. They manifest in three critical areas that should concern every business leader.

First, it creates profound operational fragility. When a high-performing model suddenly fails (and they all do, eventually), your team is left blind. Debugging becomes an exercise in superstition: you cannot trace a faulty output back through a logical chain of reasoning. The model is a statistical maze with no map. The cost is not just the error itself but the days or weeks of engineering effort wasted on forensic guesswork, which slows down your entire operational tempo.

Second, and more severely, it represents a fundamental compliance and ethical risk. We are entering an era of algorithmic accountability, in which a regulator, a journalist, or a court will ask you to defend a model's decision. "Show me that this model did not discriminate." "Explain why this patient was denied coverage." Without a clear, auditable explanation, you have no defense, and your organization is exposed. Before you know it, the model has become a legal and reputational time bomb.
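One way to make a decision defensible after the fact is to record its explanation at the moment the decision is made, instead of trying to reconstruct it months later. The sketch below is a minimal illustration under stated assumptions: it assumes per-feature contributions are already available from whatever explanation method the model uses, and the helpers `build_decision_record` and `verify_record`, the field names, and the sample values are all hypothetical, not a regulatory standard or any particular product's format.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def build_decision_record(model_id, inputs, decision, contributions):
    """Hypothetical audit-log entry: what the model saw, what it decided, and why."""
    record = {
        "model_id": model_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "inputs": inputs,
        "decision": decision,
        # Per-feature contributions from whatever explanation method is in use.
        "contributions": contributions,
        # Features ranked by absolute influence, for a human-readable answer.
        "top_factors": sorted(
            contributions, key=lambda f: abs(contributions[f]), reverse=True
        ),
    }
    # A content hash lets a reviewer check that the stored record still matches
    # what was originally written.
    record["hash"] = _digest(record)
    return record


def verify_record(record: dict) -> bool:
    """Recompute the hash over everything except the stored hash itself."""
    body = {k: v for k, v in record.items() if k != "hash"}
    return _digest(body) == record["hash"]


# Usage with made-up values for a coverage decision.
record = build_decision_record(
    model_id="coverage-model-v2",
    inputs={"age": 54, "prior_claims": 3, "policy_years": 1},
    decision="denied",
    contributions={"age": 0.4, "prior_claims": -1.1, "policy_years": -0.3},
)
print(record["top_factors"])
print(verify_record(record))
```

The design choice here is that the explanation is captured as data alongside the decision, so the answer to "why was this patient denied?" is a lookup rather than a forensic project.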

Finally, this debt leads to strategic paralysis. Your team becomes afraid of the very systems they built. The model becomes a legacy monolith the day it is deployed, immune to improvement because its internal logic is a mystery. Innovation stagnates. You cannot confidently refine a system you do not understand. The model that was meant to be a competitive advantage becomes an anchor, holding you back from adapting to new market realities.

Addressing this is not merely a technical fix; it requires a disciplined approach to how we build and think about our intelligent systems.

We must move beyond the false choice between complexity and clarity. The answer is not to abandon powerful models, but to build them with accountability as a first-class citizen. This means architecting systems that incorporate explainability techniques such as SHAP or LIME from the outset, not as a post-deployment afterthought. It means having the professional judgment to know when a simpler, interpretable model is the more responsible long-term choice, even if it sacrifices a fractional point of accuracy.
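To make the trade-off concrete, here is a minimal sketch, assuming a hypothetical credit-style logistic regression with hand-picked weights. It does not use the SHAP or LIME libraries; it relies on a known property instead: for a linear model with independent features, each feature's SHAP value in log-odds space reduces to its weight times its deviation from a baseline (typically the training mean). The class `LinearScorer`, its methods, and all feature names and numbers are illustrative, not taken from any real system.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class LinearScorer:
    """Hypothetical interpretable model: a logistic regression with known weights."""
    weights: Dict[str, float]
    bias: float
    baseline: Dict[str, float]  # assumed: feature means over the training data

    def logit(self, x: Dict[str, float]) -> float:
        return self.bias + sum(w * x[f] for f, w in self.weights.items())

    def predict_proba(self, x: Dict[str, float]) -> float:
        return 1.0 / (1.0 + math.exp(-self.logit(x)))

    def explain(self, x: Dict[str, float]) -> Dict[str, float]:
        # For a linear model with independent features, each feature's SHAP value
        # (in log-odds space) is its weight times its deviation from the baseline.
        return {f: w * (x[f] - self.baseline[f]) for f, w in self.weights.items()}

    def check_additivity(self, x: Dict[str, float], tol: float = 1e-9) -> bool:
        # Contributions must sum to logit(x) - logit(baseline); a cheap check
        # that could run in CI alongside accuracy tests.
        total = sum(self.explain(x).values())
        expected = self.logit(x) - self.logit(self.baseline)
        return math.isclose(total, expected, abs_tol=tol)


# Illustrative weights and inputs, not fitted to any real data.
scorer = LinearScorer(
    weights={"income": 0.8, "debt_ratio": -1.5, "late_payments": -0.6},
    bias=0.2,
    baseline={"income": 1.0, "debt_ratio": 0.3, "late_payments": 1.0},
)
applicant = {"income": 0.9, "debt_ratio": 0.6, "late_payments": 3.0}

contributions = scorer.explain(applicant)
for feature, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature:>14}: {value:+.3f}")
print(f"probability: {scorer.predict_proba(applicant):.3f}")
print("additive:", scorer.check_additivity(applicant))
```

Because the interpretable model explains itself exactly, the additivity check is an identity rather than an approximation. With a complex ensemble or deep network, the same attributions have to be computed by a tool such as SHAP or LIME instead of being read off the weights, which is precisely the cost that should be weighed against the fractional accuracy gain.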

This is where true expertise matters. It is the difference between simply building a model and engineering a reliable, maintainable system. It requires a clear architectural vision that treats explainability with the same seriousness as scalability or security. Many organizations lack this deep data and software engineering discipline internally. They are skilled in creating models, but not in building the robust, transparent systems that must house them.

At Syntaxia, we see our role as applying this necessary discipline. Our focus is on eliminating data dysfunction, and there is no greater dysfunction than an intelligent system that cannot explain itself. We help clients establish a methodical framework for their data initiatives, one where transparency is non-negotiable. This is not about slowing down innovation. It is about building AI systems that are not just powerful but also predictable, accountable, and trustworthy.

The goal is to replace chaos with clarity. By consciously managing explainability debt, we stop building opaque oracles and start creating intelligent partners. We build systems that empower human decision makers with insight, not just output. This is the foundation for sustainable, strategic advantage in an age of AI. It is the only path to truly responsible and effective innovation.

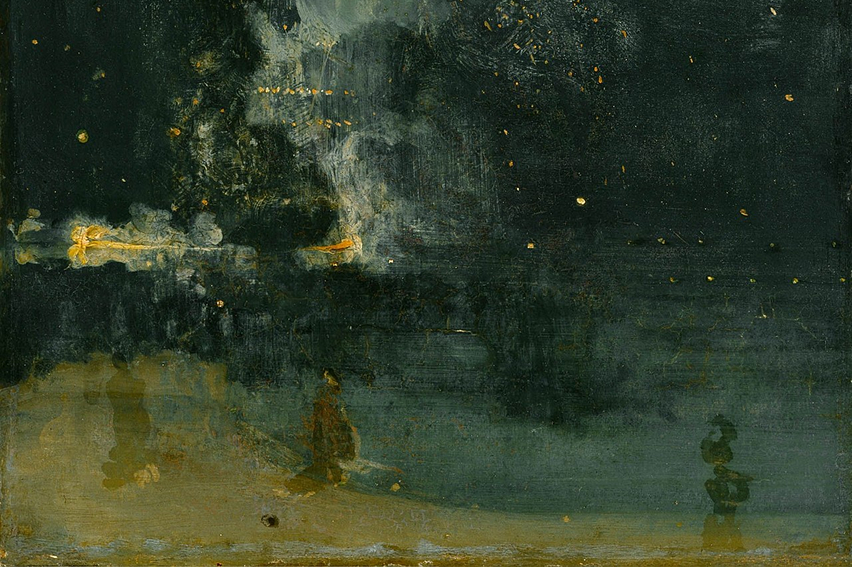

About the Art

I chose The School of Athens by Raphael (1509–1511). It’s a portrait of reason itself, a gathering of minds, each absorbed in their own search for truth. I’ve always seen it as a reminder that understanding is built through dialogue and contrast. In that sense, explainability in AI carries the same spirit: a continuous effort to make complex systems part of a shared language of meaning.