A guide to cleaning up system noise.

December 10, 2025

We spend our days making decisions, trusting that the information before us is a reasonable representation of reality. That trust is often misplaced. The greatest risk to your strategy is not a flawed analysis of good data, but a brilliant analysis of flawed data. The failure occurs long before the decision point, in the silent, tangled pathways we use to retrieve information. This is system noise, and it is the quiet architect of poor outcomes.

Retrieval noise shifts judgment one incorrect signal at a time. It is not a single catastrophic error but a thousand small compromises in the pipes that feed your decisions. Clean retrieval pathways reduce confusion, tighten feedback loops, and keep teams aligned with what is actually happening, not what your systems happen to report.

To understand the cost, you must first see how a clean signal becomes corrupted. It is rarely a single point of failure. It is a chain of small, reasonable compromises that together produce an unreasonable result.

The Mirage of Completeness

The most common distortion is the presentation of a partial truth as a whole truth. A dashboard shows sales figures, but the retrieval logic silently excludes a newly acquired subsidiary because its data format is slightly different. A report on customer satisfaction pulls from the ticketing system, but ignores survey data from the marketing platform. The data is accurate within its confined scope, but the decision made from it is blind to a significant portion of the landscape. This creates confidence in a fictional reality. Leaders act on what they believe is complete knowledge, pursuing strategies that address only a fraction of the problem or opportunity.
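
One way to make a partial truth visible is to compare what a rollup actually received against a registry of what it should have received. The sketch below assumes a hypothetical registry, `EXPECTED_ENTITIES`, and rows carrying an `entity` field; it refuses to report a total when a known entity, such as a newly acquired subsidiary, contributed nothing.

```python
# Hypothetical registry: every business unit that should feed the sales rollup.
EXPECTED_ENTITIES = {"parent_co", "subsidiary_a", "acquired_sub_b"}


def missing_entities(rows, expected=EXPECTED_ENTITIES):
    """Return expected entities that contributed no rows, sorted for stable output."""
    seen = {row["entity"] for row in rows}
    return sorted(expected - seen)


def sales_total(rows):
    """Sum sales, but refuse to report a total that silently omits a known entity."""
    missing = missing_entities(rows)
    if missing:
        raise ValueError(f"incomplete rollup, no rows from: {', '.join(missing)}")
    return sum(row["amount"] for row in rows)
```

The check does not fix the format mismatch that dropped the subsidiary; it only ensures the gap is reported instead of being presented as a complete number.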

The Deception of Accuracy

Here, the data is wrong, not just incomplete. It is a corrupted fact. This often stems from what I call "inherited assumptions," logic written years ago by someone who has since left the company, embedded in a pipeline no one fully understands. A retrieval process might deduplicate customer records based on an old rule that no longer applies, merging two distinct companies. A currency conversion uses a static table instead of a daily rate. The numbers are precise and presented with authority, but they are fiction. Decisions based on deceptive accuracy allocate real resources to phantom problems or direct teams to chase imaginary gains. The eventual discovery of the error erodes trust in all data, creating a culture of instinct over evidence.
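
To see how an inherited deduplication rule can merge two distinct companies, consider a hypothetical legacy rule that strips legal suffixes and lowercases names. The field names (`name`, `tax_id`) and the suffix list are assumptions for illustration; the point is the contrast with keying on a stable identifier.

```python
import re


def legacy_key(record):
    # Hypothetical "inherited" rule: lowercase the name and strip a trailing legal suffix.
    name = record["name"].lower()
    return re.sub(r"\b(inc|corp|llc|ltd)\.?$", "", name).strip()


def stable_key(record):
    # Key on an identifier that actually distinguishes legal entities.
    return record["tax_id"]


def dedupe(records, key):
    """Keep the first record seen for each key value."""
    kept = {}
    for record in records:
        kept.setdefault(key(record), record)
    return list(kept.values())


records = [
    {"name": "Acme Corp", "tax_id": "11-111"},
    {"name": "Acme Inc", "tax_id": "22-222"},  # a different legal entity
]

print(len(dedupe(records, legacy_key)))  # 1: two companies silently merged
print(len(dedupe(records, stable_key)))  # 2: distinct entities preserved
```

Both results are precise, and only one is true; nothing in the merged output signals that a company has disappeared.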

The Velocity Illusion

Modern tools promise real-time intelligence, and in pursuit of speed, we often sacrifice veracity. This is the trade-off between fast data and correct data. A retrieval path optimized for low latency may bypass essential validation or consolidation steps. It delivers a signal so quickly that you can act on it immediately, but the signal is noisy and unstable. Reacting to this noise creates decision churn, a constant, costly oscillation in strategy as you respond to fluctuations that are artifacts of the system, not changes in the market. Slow, confident feedback is preferable to fast, misleading noise.

The technical consequences are measurable: wasted capital, missed opportunities, compliance risks. The human consequences are cultural and strategic, and they are more damaging in the long term.

The Erosion of Trust and Alignment

When teams operate from different versions of the truth, debate ceases to be about strategy and degenerates into arguments over data provenance. The sales team uses numbers from the CRM, marketing from the analytics platform, finance from the ERP. Each believes their data is correct. This is not mere disagreement; it is a fundamental misalignment of perception. It fractures organizational unity, as departments optimize for their own version of reality rather than a shared company objective. Trust in the organization's core intelligence falters, and decision-making becomes political, rooted in influence rather than information.

The Atrophy of Judgment

Persistent noise forces a terrible adaptation. When people cannot trust the system, they stop using it, or worse, they perform their own shadow retrieval. They export data to spreadsheets, manually reconcile numbers, and build personal databases. This does not solve the problem; it atomizes it. Institutional knowledge gives way to tribal knowledge, locked on individual hard drives. The organization's capacity for informed judgment atrophies because its collective intelligence is fragmented and unmaintained. You are left with a team of data clerks, not strategists.

Fixing this is not primarily a technology purchase. It is an exercise in operational discipline. It requires treating data retrieval not as an IT function, but as a critical production line, with the same rigor applied to quality control and process engineering.

Define the Critical Few

You cannot boil the ocean. Do not start with a mandate to clean all data. That is a sure path to exhaustion and failure. Start by identifying the three to five most consequential decisions made regularly in your company. What is the information one level below the dashboard? Trace it. Follow the retrieval path for the key metric that informs your quarterly investment decision, or your primary compliance report. Map it on a whiteboard from the original source system to the screen where it is viewed. You will find the gaps, the handoffs, the undocumented assumptions. This focused investigation yields immediate, high-impact clarity.
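
The whiteboard map can also be kept as a small, checkable record. The sketch below is one possible shape, assuming a hypothetical hop-by-hop `LINEAGE` list with `src`, `dst`, `logic`, and `owner` fields; the table names are invented. It reports broken handoffs (a hop whose source is not the previous hop's destination) and hops with no documented logic or owner.

```python
# Hypothetical hop-by-hop lineage for one metric, transcribed from the whiteboard.
LINEAGE = [
    {"src": "erp.orders", "dst": "staging.orders", "logic": "nightly extract", "owner": "data-eng"},
    {"src": "staging.orders", "dst": "mart.revenue", "logic": "FX conversion, dedupe", "owner": None},
    {"src": "mart.revenue_v2", "dst": "dashboard.q_revenue", "logic": None, "owner": "finance"},
]


def audit_lineage(hops):
    """Return findings: broken handoffs first, then hops missing logic or an owner."""
    findings = []
    # A handoff is broken when one hop's output is not the next hop's input.
    for prev, nxt in zip(hops, hops[1:]):
        if prev["dst"] != nxt["src"]:
            findings.append(f"gap: {prev['dst']} does not feed {nxt['src']}")
    for hop in hops:
        label = f"{hop['src']} -> {hop['dst']}"
        if not hop["logic"]:
            findings.append(f"undocumented logic: {label}")
        if not hop["owner"]:
            findings.append(f"no owner: {label}")
    return findings
```

For the sample above, `audit_lineage(LINEAGE)` returns three findings: one gap between `mart.revenue` and `mart.revenue_v2`, one hop with no owner, and one hop with undocumented logic.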

Install Circuit Breakers, Not Just Dashboards

Monitoring is passive. A circuit breaker is active. Instead of just visualizing a potentially bad number, build logic that halts a process or triggers a mandatory review when data fails basic sanity checks. If a retrieval job pulls in only ten records when it typically pulls ten thousand, it should not silently proceed. It should stop and alert. If a calculated ratio falls outside its plausible range, it should be flagged for human validation before anyone consumes it. This institutionalizes a discipline of validation, making data quality an operational checkpoint, not an afterthought.
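
A minimal sketch of the two checks described above, assuming a hypothetical pipeline step that computes a conversion rate. The exception name, the 50% row-count threshold, and the `converted` field are illustrative assumptions; in practice the raised error would also notify whoever owns the review.

```python
class CircuitBreakerTripped(Exception):
    """Raised to halt a pipeline step instead of letting bad data flow downstream."""


def check_row_count(count, typical, min_fraction=0.5):
    # Assumed threshold: halt if fewer than half the usual number of rows arrived.
    if count < typical * min_fraction:
        raise CircuitBreakerTripped(
            f"expected about {typical} rows, got {count}; halting for review"
        )


def check_range(name, value, low, high):
    # Bounds are set per metric by whoever owns it; values outside them need a human.
    if not low <= value <= high:
        raise CircuitBreakerTripped(f"{name}={value} outside [{low}, {high}]")


def conversion_step(rows, typical_rows):
    """Compute a conversion rate only after the row-count and range checks pass."""
    check_row_count(len(rows), typical_rows)
    rate = sum(row["converted"] for row in rows) / len(rows)
    check_range("conversion_rate", rate, 0.0, 1.0)  # a rate above 1.0 is impossible
    return rate
```

Ten rows against a typical ten thousand trips the first breaker; a batch where `converted` was double-counted trips the second. Either way, nothing downstream consumes the number until someone has looked at it.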

Cultivate Curators, Not Just Custodians

Assign clear ownership of key data domains to individuals who act as curators. A custodian ensures the servers are running. A curator understands the meaning, lineage, and acceptable quality of the data. They are the arbiters of definition. Is a "customer" anyone who ever registered, or only someone who has purchased? The curator establishes this, documents it, and certifies the retrieval paths that rely on it. This is a role that blends business acumen with technical understanding. It moves responsibility from an anonymous IT department to a named, accountable expert embedded in the business logic.
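
A curator's decision is most useful when every retrieval path calls it rather than re-deriving it. The sketch below assumes a hypothetical `Account` record and encodes one possible answer to the "customer" question (at least one purchase) as a single function, with the definition's owner and version recorded alongside it. The metadata fields are illustrative, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    account_id: str
    purchase_count: int


# Hypothetical metadata a curator might publish with a certified definition.
CUSTOMER_DEFINITION = {
    "term": "customer",
    "rule": "a registered account with at least one completed purchase",
    "owner": "named curator, e.g. head of sales operations",
    "version": "2025-12",
}


def is_customer(account: Account) -> bool:
    """The one certified rule; reports call this instead of writing their own filter."""
    return account.purchase_count >= 1


def count_customers(accounts) -> int:
    return sum(1 for account in accounts if is_customer(account))
```

If the curator later changes the rule, the change happens in one place and the version field records when it did.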

Embrace Monolithic Clarity Over Fragile Complexity

There is a fetish for microservices and event-driven architectures that, when poorly implemented, become perfect engines for generating retrieval noise. A simple, well-understood, slightly monolithic retrieval pipeline that is meticulously maintained is superior to a "modern," distributed maze that no single person comprehends. Choose the simplest architecture that can do the job with veracity. Often, a scheduled batch process that produces a clean, vetted dataset in a central location is the most reliable foundation for decision-making. Complexity is the enemy of retrieval quality.
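
As one illustration of that batch pattern, the sketch below validates a batch and then publishes it to a single CSV file by writing to a temporary file and swapping it into place with `os.replace`. The validation rules and the `amount` field are hypothetical. On POSIX filesystems the rename is atomic when both paths are on the same filesystem, so readers see either the previous vetted file or the new one, never a half-written file; a failed validation leaves the previous file untouched.

```python
import csv
import os
import tempfile


def validate_rows(rows):
    # Hypothetical checks; a real pipeline would use the curator's rules.
    if not rows:
        raise ValueError("empty batch; refusing to publish")
    if any(row["amount"] < 0 for row in rows):
        raise ValueError("negative amount in batch")


def publish_batch(rows, dest_path, validate=validate_rows):
    """Validate a batch, then publish it to one central file in a single swap."""
    validate(rows)  # raising here aborts the run; the last vetted file stays in place
    dest_dir = os.path.dirname(os.path.abspath(dest_path))
    # Write next to the destination so the final rename stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=dest_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
            writer.writeheader()
            writer.writerows(rows)
        os.replace(tmp_path, dest_path)
    except BaseException:
        os.remove(tmp_path)  # never leave a partial file behind
        raise
```

A scheduler such as cron can run this once per period; the design favors a dataset that is sometimes a little stale over one that is sometimes wrong.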

At Syntaxia, we apply this principle of disciplined simplicity to complex data environments. We see our role as engineers of clarity. Our task is not to sell you on the latest tool, but to help you build retrieval pathways you can trust. This requires a military-inspired focus on process, deep expertise in disentangling complexity, and the cultural fluency to align your teams around a single version of the truth. The outcome is not just better data hygiene. It is the restoration of confidence in your own judgment, and the alignment of your entire organization with the reality of your business. That is the foundation upon which good decisions are finally built.

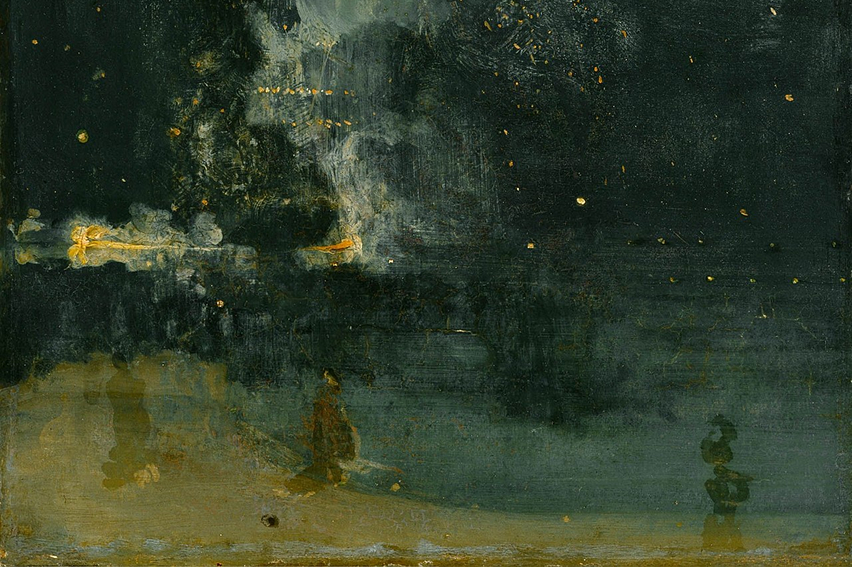

About the Art

“The Calling of Saint Matthew” (Caravaggio, 1600). I thought of this painting because the central tension in the scene comes from where the light actually falls versus where people think it’s coming from. Some figures respond to the signal; others miss it completely. That dynamic felt close to the idea of retrieval noise. Information reaches different teams with different levels of clarity, and some people act while others are left guessing. The painting holds that quiet imbalance between the intended truth and the perceived one, which is what this article is trying to surface.

Source: https://en.wikipedia.org/wiki/File:Caravaggio_%E2%80%94_The_Calling_of_Saint_Matthew.jpg#filelinks