Practical checks and monitoring signals to spot silent structural changes.

December 11, 2025

I have watched talented data engineers become professional archaeologists. Their work is no longer about building the future, but excavating the past. They sift through layers of transformation logic, digging through lineage graphs, trying to piece together when and why their numbers began to lie. The culprit, so often, is not in their code. It is in the quiet, unannounced evolution of the data structures they were promised would remain stable. This is the insidious reality of schema drift. It does not attack your systems. It subtly rewrites the reality upon which they are built, turning your pipeline from a trusted channel into a conduit for fiction.

Our industry's prevailing sin is treating data schemas as constants in a universe that only understands change. We design intricate systems that assume the shape of today's data will be the shape of tomorrow's. This is a beautiful, logical fantasy. In the real world, production databases are living organisms. APIs mature. Business logic evolves. Each change is rational and necessary for the source system. But for your downstream pipelines, each change is a potential breach of contract. The goal is not to stop time. It is to build a sensory apparatus so finely tuned that you feel the first tremor of structural change long before the ground falls away beneath your feet.

To defend against drift, you must first understand its dialects. Most teams only listen for the loud, obvious declarations, missing the whispers that do the real damage.

Additive drift is the polite guest. A new nullable field appears. It seems harmless, even helpful. Yet it can destabilize pipelines that rely on wildcard selects or positional parsing, because a column inserted mid-row shifts the position of every column after it. It also introduces ambiguity. Is this new field meaningful? Should it be ignored? The very question creates cognitive load and hesitation.
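To make the positional-parsing failure concrete, here is a minimal sketch using Python's standard `csv` module. The feed contents and the `referral_code` column are hypothetical. The point is that the positional parser does not crash after the addition; it quietly reads the wrong columns, while a parser that looks columns up by header name keeps working, and a header comparison surfaces the new field.

```python
import csv
import io

# Hypothetical feed: v1 has three columns; v2 adds a nullable
# "referral_code" column in the middle (additive drift).
V1 = "order_id,customer_id,amount\n1001,42,19.99\n"
V2 = "order_id,referral_code,customer_id,amount\n1001,,42,19.99\n"


def parse_positional(text: str) -> list[dict]:
    """Fragile: assumes customer_id is column 1 and amount is column 2."""
    rows = list(csv.reader(io.StringIO(text)))[1:]  # skip the header row
    return [{"customer_id": r[1], "amount": r[2]} for r in rows]


def parse_by_name(text: str) -> list[dict]:
    """Tolerates additions: looks each column up by its header name."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"customer_id": r["customer_id"], "amount": r["amount"]} for r in reader]


def added_columns(old_text: str, new_text: str) -> set[str]:
    """Columns present in the new header but absent from the old one."""
    old_header = next(csv.reader(io.StringIO(old_text)))
    new_header = next(csv.reader(io.StringIO(new_text)))
    return set(new_header) - set(old_header)


print(parse_positional(V2))   # [{'customer_id': '', 'amount': '42'}]  no error, wrong data
print(parse_by_name(V2))      # [{'customer_id': '42', 'amount': '19.99'}]
print(added_columns(V1, V2))  # {'referral_code'}
```

Reading by name removes the positional hazard, but it does not answer whether the new field matters; that question still needs a human.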

Subtractive drift is the thief in the night. A column vanishes, or becomes a ghost column, populated only with nulls. Pipelines that depend on it do not always scream. Often, they whisper back, filling the void with default zeroes or empty strings. Your reports run without error, but they are now systematically misleading. This is the most dangerous kind of break, the one that leaves no trace in your error logs, only in the flawed decisions made from its output.
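A minimal sketch of both halves of this failure, assuming rows arrive as Python dicts: the `.get()` call with a default is the "whisper" that turns a vanished column into a plausible zero, and the check distinguishes a column that is gone entirely from a ghost column that is present but null in every row. The column names and batch are hypothetical.

```python
from typing import Any

# Hypothetical batch: "discount" was dropped upstream, and "region" is a
# ghost column (still present, but null in every row).
batch = [
    {"order_id": 1, "amount": 50.0, "region": None},
    {"order_id": 2, "amount": 75.0, "region": None},
]
EXPECTED = ["order_id", "amount", "discount", "region"]


def silent_total_discount(rows: list[dict[str, Any]]) -> float:
    # The whisper: a default value hides the missing column, and the
    # report shows a confident 0.0 instead of an error.
    return sum(r.get("discount", 0.0) for r in rows)


def subtractive_drift(rows: list[dict[str, Any]], expected: list[str]) -> dict[str, list[str]]:
    """Split expected columns into 'missing' (absent from every row) and
    'ghost' (present, but null in every row where it appears)."""
    missing, ghost = [], []
    for col in expected:
        present = [r for r in rows if col in r]
        if not present:
            missing.append(col)
        elif all(r[col] is None for r in present):
            ghost.append(col)
    return {"missing": missing, "ghost": ghost}


print(silent_total_discount(batch))        # 0.0
print(subtractive_drift(batch, EXPECTED))  # {'missing': ['discount'], 'ghost': ['region']}
```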

Then there are the true saboteurs: type drift and semantic drift. Type drift changes the fundamental nature of a field. An integer becomes a string. A timestamp's precision shifts. Your casting logic will either fail spectacularly or, more treacherously, succeed incorrectly, silently corrupting the value's meaning. Semantic drift is the masterstroke of deception. The column customer_tier remains a string. But the definition of "gold" has shifted from "annual spend over $10,000" to "over $15,000". Your dashboards and models, built on the old logic, now present a coherent, convincing, and utterly inaccurate reality. This drift leaves no technical signature. It can only be caught by understanding the data's soul, not just its skeleton.
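The sketch below shows one hedged way to catch each saboteur in a batch of dict rows. Type drift leaves a signature in the observed value types, and a precision shift in epoch timestamps often shows up as a jump in magnitude (the `10**11` cutoff is an assumption that fits present-day seconds versus milliseconds). Semantic drift has no type signature, so the only defense is to encode the documented business rule, here the article's "gold means annual spend over $10,000", and measure how often the data still agrees with it. All field names beyond `customer_tier` are hypothetical.

```python
from collections import defaultdict
from typing import Any


def observed_types(rows: list[dict[str, Any]]) -> dict[str, set[str]]:
    """Collect the Python type names seen for each field (nulls ignored)."""
    seen: dict[str, set[str]] = defaultdict(set)
    for row in rows:
        for field, value in row.items():
            if value is not None:
                seen[field].add(type(value).__name__)
    return dict(seen)


def type_drift(rows: list[dict[str, Any]], expected: dict[str, str]) -> dict[str, set[str]]:
    """Fields whose observed types differ from the single expected type."""
    seen = observed_types(rows)
    return {f: t for f, t in seen.items() if f in expected and t != {expected[f]}}


def looks_like_epoch_millis(values: list[int]) -> bool:
    # Heuristic assumption: current epoch seconds are ~1.7e9, millis ~1.7e12.
    return any(v > 10**11 for v in values)


def semantic_rule_agreement(rows: list[dict[str, Any]], threshold: float = 10_000) -> float:
    """Share of rows where 'gold iff annual_spend > threshold' still holds.
    A drop below 1.0 suggests the definition moved upstream."""
    if not rows:
        return 1.0
    agree = sum((r["customer_tier"] == "gold") == (r["annual_spend"] > threshold) for r in rows)
    return agree / len(rows)


rows = [
    {"customer_id": 1, "customer_tier": "gold", "annual_spend": 20_000.0},
    {"customer_id": "2", "customer_tier": "silver", "annual_spend": 12_000.0},
]
expected = {"customer_id": "int", "customer_tier": "str", "annual_spend": "float"}
print(type_drift(rows, expected))     # {'customer_id': {'int', 'str'}} (set order may vary)
print(semantic_rule_agreement(rows))  # 0.5: a $12,000 customer is no longer gold
```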

Standard advice is to compare schema snapshots. This is a start, but it is the equivalent of checking your watch twice a day and calling it time management. It is a static defense against a dynamic threat. You need a living, observant nervous system embedded in your data flow.
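For reference, the snapshot comparison fits in a few lines. This sketch assumes a schema snapshot is simply a mapping from column name to declared type string. Note what it cannot see: a ghost column, a jump in null rate, or a redefined "gold" all produce an empty diff.

```python
from typing import Any

Schema = dict[str, str]  # assumed snapshot shape: column name -> declared type


def diff_snapshots(old: Schema, new: Schema) -> dict[str, Any]:
    """Structural diff between two schema snapshots."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    retyped = {c: (old[c], new[c]) for c in sorted(old.keys() & new.keys()) if old[c] != new[c]}
    return {"added": added, "removed": removed, "retyped": retyped}


yesterday = {"id": "bigint", "tier": "varchar", "spend": "numeric"}
today = {"id": "varchar", "tier": "varchar", "region": "varchar"}
print(diff_snapshots(yesterday, today))
# {'added': ['region'], 'removed': ['spend'], 'retyped': {'id': ('bigint', 'varchar')}}
print(diff_snapshots(yesterday, yesterday))
# {'added': [], 'removed': [], 'retyped': {}}  even if every value changed meaning
```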

Your first layer of neurons must be contractual validation at ingestion. This is non-negotiable. Every data payload must be validated against an explicit, versioned schema contract before it is granted entry. This contract must be strict, and its enforcement must be total. A payload that violates it is not logged and tolerated. It is rejected, and the resulting alert is treated with the urgency of a breached firewall. This establishes a clear, immovable boundary. It turns a downstream data mystery into an upstream operational ticket, which is exactly where it belongs.
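A minimal, hand-rolled sketch of a strict, versioned contract, assuming payloads arrive as Python dicts. `SchemaContract` and `ContractViolation` are hypothetical names; in practice teams often express the contract in JSON Schema, Avro, or Protobuf behind a schema registry instead. Strictness here means undeclared fields, missing fields, unexpected nulls, and wrong types all cause rejection.

```python
from dataclasses import dataclass


class ContractViolation(Exception):
    """Raised when a payload breaks the contract; the payload is rejected."""


@dataclass(frozen=True)
class SchemaContract:
    name: str
    version: str
    fields: dict[str, type]            # required field -> exact expected type
    nullable: frozenset[str] = frozenset()

    def validate(self, payload: dict) -> dict:
        """Return the payload unchanged if it conforms; otherwise raise."""
        errors = []
        missing = self.fields.keys() - payload.keys()
        extra = payload.keys() - self.fields.keys()
        if missing:
            errors.append(f"missing fields: {sorted(missing)}")
        if extra:
            errors.append(f"undeclared fields: {sorted(extra)}")
        for field, expected in self.fields.items():
            if field not in payload:
                continue  # already reported as missing
            value = payload[field]
            if value is None:
                if field not in self.nullable:
                    errors.append(f"{field}: null not allowed")
            elif type(value) is not expected:  # exact match: bool is not int
                errors.append(f"{field}: expected {expected.__name__}, got {type(value).__name__}")
        if errors:
            raise ContractViolation(f"{self.name} v{self.version}: " + "; ".join(errors))
        return payload


orders_v1 = SchemaContract(
    "orders", "1.0.0", {"order_id": int, "amount": float, "coupon": str},
    nullable=frozenset({"coupon"}),
)
orders_v1.validate({"order_id": 1, "amount": 9.5, "coupon": None})  # accepted
```

A rejected payload raises with every violation listed, e.g. `orders v1.0.0: undeclared fields: ['channel']; order_id: expected int, got str`, which is the text you would route into the alert.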

But contracts can only govern what you have thought to define. The second, more subtle layer of your nervous system is behavioral profiling. You must measure the living pulse of your data, not just its static structure. For every field, profile each batch. Track the null percentage. Measure the cardinality of distinct values. Record the statistical distribution of numbers, the length of strings, the bounds of dates. This creates a fingerprint of normalcy. A column that has shown a null rate of 0.1% for a year suddenly jumping to 20% is not a schema change. It is a seismic event in the source system's logic. The schema registry will not notice. Your statistical profile will scream.
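A minimal per-batch profiler, assuming each batch is a list of dicts with scalar values. It records null rate, distinct count, numeric range and mean, and string lengths; date bounds are left out for brevity but would follow the same min/max pattern. Storing one such fingerprint per batch gives the history the next layer needs.

```python
import statistics
from typing import Any


def profile_batch(rows: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Per-field fingerprint of one batch (assumes hashable scalar values)."""
    fields = {f for row in rows for f in row}
    profile: dict[str, dict[str, Any]] = {}
    for field in sorted(fields):
        values = [row.get(field) for row in rows]
        present = [v for v in values if v is not None]
        stats: dict[str, Any] = {
            "null_rate": 1 - len(present) / len(rows),
            "distinct": len(set(present)),
        }
        # bool is a subclass of int, so exclude it from numeric stats.
        numbers = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
        if numbers:
            stats.update(min=min(numbers), max=max(numbers), mean=statistics.fmean(numbers))
        strings = [v for v in present if isinstance(v, str)]
        if strings:
            lengths = [len(s) for s in strings]
            stats.update(min_len=min(lengths), max_len=max(lengths))
        profile[field] = stats
    return profile


batch = [{"a": 1, "b": "xy"}, {"a": None, "b": "xyz"}, {"a": 3, "b": "xy"}, {"a": 5, "b": None}]
print(profile_batch(batch))
```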

The third layer is the autonomous brain: automated anomaly detection on these behavioral fingerprints. Do not ask an engineer to watch a dashboard. Embed machine-driven vigilance. Set statistical control limits. Train simple models to recognize the rhythm of your data. When the pattern breaks, when the fingerprint is no longer a match, the system must raise an alert before any job fails, before any dashboard loads with bad data. This is the shift from reactive debugging to predictive maintenance.
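One simple form of "statistical control limits" is the classic mean ± 3σ band computed over a metric's history, such as a field's daily null rate. This sketch uses that technique rather than a trained model; the 3σ width and the 14-point minimum baseline are assumptions to tune, not recommendations.

```python
import statistics


def control_limits(history: list[float], sigmas: float = 3.0) -> tuple[float, float]:
    """Lower and upper control limits: mean +/- sigmas * sample stdev."""
    mean = statistics.fmean(history)
    sd = statistics.stdev(history)
    return mean - sigmas * sd, mean + sigmas * sd


def is_anomalous(history: list[float], today: float,
                 sigmas: float = 3.0, min_history: int = 14) -> bool:
    """Flag today's value if it falls outside the control limits."""
    if len(history) < min_history:
        return False  # not enough baseline yet to judge
    lo, hi = control_limits(history, sigmas)
    return not (lo <= today <= hi)


# A null rate that hovered around 0.1% for a month, then jumps to 20%.
null_rates = [0.0010, 0.0012] * 15
print(is_anomalous(null_rates, 0.20))    # True
print(is_anomalous(null_rates, 0.0011))  # False
```

If a history is perfectly constant its standard deviation is zero, so any change at all is flagged; that strictness may be what you want for fields that should never move.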

A perfect detection system that feeds into organizational chaos is merely a better way to document your own failure. The alert fires. What then? If the response is a frantic engineer making a unilateral, untracked adjustment to a dbt model, you have solved nothing. You have just automated the first step of a new crisis.

Detection must be wired into a formal protocol for managed evolution. An alert about a potential schema drift must trigger a deliberate process. The first step is triage. Is this a breaking change, a non-breaking addition, or a behavioral anomaly? This decision dictates the action: a halted pipeline, a schema contract version update, or a conversation with the source system's owners.
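The triage step can be encoded so that every alert maps to a named action. This sketch assumes the structural input is a dict with `added`, `removed`, and `retyped` entries and that behavioral checks return a list of anomalous field names; the `Triage` enum is hypothetical. It returns every applicable action rather than one, and it treats an addition as non-breaking, which holds only if consumers read columns by name.

```python
from enum import Enum


class Triage(Enum):
    BREAKING = "halt the pipeline"
    BEHAVIORAL = "open a conversation with the source owners"
    ADDITIVE = "update the schema contract version"


def triage(diff: dict, anomalous_fields: list[str]) -> list[Triage]:
    """Map detected drift to actions, most severe first; [] means no action."""
    actions = []
    if diff.get("removed") or diff.get("retyped"):
        actions.append(Triage.BREAKING)
    if anomalous_fields:
        actions.append(Triage.BEHAVIORAL)
    if diff.get("added"):
        actions.append(Triage.ADDITIVE)
    return actions


print(triage({"added": ["region"], "removed": [], "retyped": {}}, ["amount"]))
# [<Triage.BEHAVIORAL: ...>, <Triage.ADDITIVE: ...>]
```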

This requires a deep cultural shift. It means the application team that owns the source database must understand that their change is not complete when it hits production. It is complete only when the downstream data contracts have been reconciled. Data must be treated as a product, and schema changes are its new, potentially breaking, releases. This introduces a necessary friction, a discipline of communication that is the hallmark of mature, scalable organizations. It replaces the chaos of surprise with the calm of coordination.

Do not attempt to boil the ocean. Begin with a targeted, ruthless focus on your single most valuable and volatile data source. Apply the full stack of discipline there: the ironclad contract, the daily profiling, the automated alerts, and the written response protocol. Do it for one source and do it perfectly. The stability and confidence you gain will be palpable. It will pay for the expansion to the second source, and the third.

This is the work we do at Syntaxia. We begin by installing this nervous system at the point of ingestion for our clients' most critical data. We apply a methodical, precision-focused discipline to the problem. The result is never just a set of working alerts. It is the restoration of trust. It is the liberation of data engineers from the endless dig of forensic archaeology. It is the return of their time and talent to building the future, secure in the knowledge that the foundation beneath them will signal its movement, giving them the grace to adapt.

Schema drift is not your enemy. Your ignorance of it is. Build the system that ends that ignorance. Build the sensory apparatus that turns silent structural shifts into communicated, managed events. This is how you move from a team that fears the next break to a team that confidently orchestrates the constant, graceful evolution of its data reality.

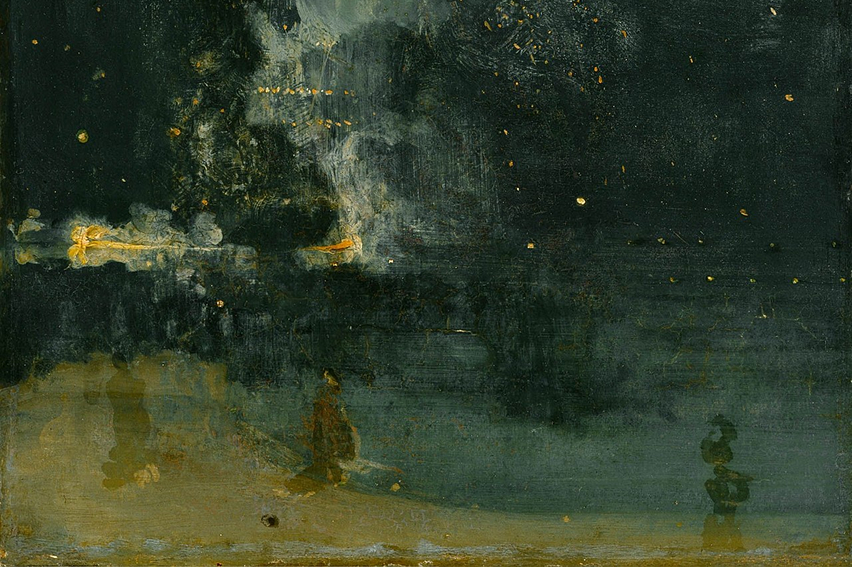

About the Art

“The Falling Rocket” (James McNeill Whistler, 1875). What drew me to this painting is the way the scene hides its own structure. The shapes soften, the boundaries blur, and you only notice the shift in form after spending time with it. That felt very close to schema drift. The meaning changes quietly before anyone realizes it, and by the time the difference is visible, the system has already moved on. I chose it because it captures that subtle moment where a familiar pattern becomes something else, without announcing the transition.

Source: By Johannes Vermeer - www.mauritshuis.nl : Home : Info : : Image, Public Domain, https://commons.wikimedia.org/w/index.php?curid=50398
