Practical modeling patterns that keep pipelines simple, stable, and predictable.

December 16, 2025

Technical debt in analytics rarely begins where teams expect it to. It does not usually appear when data volume explodes or tooling reaches its limits. It begins much earlier, at the moment someone decides what a table represents and how it should be understood.

Those early decisions often feel temporary. Teams move quickly, assumptions feel obvious, and definitions remain unwritten. What feels obvious in the moment slowly becomes invisible context. Over time, that invisible context hardens into structure.

By the time symptoms surface, dashboards disagree, pipelines resist change, and every adjustment feels risky. At that stage, the problem is no longer technical. It is architectural.

Data modeling is where intent either becomes durable or quietly decays.

There is a comforting belief that analytics technical debt is the price of growth. More users, more tools, more complexity. The belief persists because it explains pain without questioning early judgment.

In practice, scale only exposes weaknesses that were already present. Debt accumulates when models fail to communicate intent clearly enough to survive handoffs, time, and change. A small warehouse with ambiguous semantics becomes harder to evolve than a large one with disciplined structure. Queries still run. Numbers still appear. Confidence quietly erodes.

Analytics modeling exists to make meaning explicit. When it does not, every downstream improvement inherits uncertainty.

When intent is unclear, teams tend to reach for performance fixes. Faster queries. Lower costs. Better utilization. These improvements matter, but they operate on the wrong layer.

A model that performs well but requires explanation every time it is queried has already failed its primary function. Analytics is meant to reduce cognitive effort, not shift it onto the user. This is why modeling must prioritize meaning before efficiency. Clear concepts make performance work safer. Ambiguous concepts turn optimization into a way of freezing confusion in place. Once meaning is fixed, efficiency becomes a refinement rather than a gamble.

Meaning collapses fastest when grain is unclear. Every analytics table answers an implicit question about what one row represents. When that answer remains unstated, inconsistency follows.

Mixed-grain tables behave unpredictably. Filters distort results. Aggregations feel correct until they are not. Debugging becomes interpretation rather than diagnosis. Declaring grain forces a commitment to reality. One row represents one thing, at one level, for one purpose. Everything else must align with that choice. This single decision eliminates a large class of errors and creates a foundation that naming and metrics can build on reliably.
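One way to make a declared grain enforceable is to test it. The sketch below is illustrative only: the table, its column names, and the `grain_violations` helper are hypothetical, and it assumes rows are available as plain dictionaries. It reports every grain key that appears on more than one row.

```python
from collections import Counter

def grain_violations(rows, grain_keys):
    """Return each grain-key tuple that appears on more than one row, with its count."""
    counts = Counter(tuple(row[k] for k in grain_keys) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}

# Hypothetical table whose declared grain is "one row per order per day".
daily_orders = [
    {"order_id": "A1", "order_date": "2025-01-01", "net_amount": 40.0},
    {"order_id": "A2", "order_date": "2025-01-01", "net_amount": 15.0},
    {"order_id": "A1", "order_date": "2025-01-01", "net_amount": 40.0},  # breaks the grain
]

violations = grain_violations(daily_orders, ("order_id", "order_date"))
print(violations)
```

A check like this can run whenever the table is rebuilt, so a broken grain surfaces as a failed test rather than as a dashboard discrepancy.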

Once grain is clear, meaning still has to survive time, turnover, and reuse. That survival depends almost entirely on naming.

Names carry assumptions about scope, timing, and intent. Weak names outsource those assumptions to tribal knowledge. Strong names encode them directly into the model. This becomes critical with metrics. A field named revenue may look harmless, but it silently invites disagreement. Gross or net. Recognized when. Adjusted how.

Explicit naming feels heavy early. Over time, it prevents redefinition, duplication, and quiet drift. Naming becomes a form of architectural memory.
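As a small illustration of what explicit naming can look like, the sketch below replaces a bare `revenue` field with names that state scope, timing, and currency. The `OrderRevenue` class and every field name are assumptions made for the example, not a recommended standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class OrderRevenue:
    """Hypothetical record: each name says what is measured, when, and in which unit."""
    order_id: str
    gross_revenue_booked_usd: float  # amount at booking, before discounts and refunds
    discount_usd: float
    refund_usd: float

    @property
    def net_revenue_booked_usd(self) -> float:
        # Net is derived in exactly one place, so "gross or net" is never a guess.
        return self.gross_revenue_booked_usd - self.discount_usd - self.refund_usd

order = OrderRevenue("A1", gross_revenue_booked_usd=100.0, discount_usd=10.0, refund_usd=5.0)
print(order.net_revenue_booked_usd)
```

The names are longer, but a reader no longer has to ask which revenue is meant.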

Even with clear grain and naming, systems degrade when raw data and interpreted meaning are combined. Raw data exists to preserve traceability to source systems. Analytical models exist to express how the business chooses to understand that data. When these roles blur, change becomes dangerous. Upstream shifts ripple unexpectedly. Logic migrates into dashboards. Trust erodes because no single layer owns meaning.

Separating raw ingestion from analytical truth gives the system a place to absorb change. Sources evolve. Definitions remain stable. When questions arise, answers can be traced deliberately. Predictability emerges from this separation.
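The separation can be sketched in a few lines. In this hypothetical example the source system renames a field between two deliveries. The raw records are kept exactly as received, and a single translation function (`to_analytical_order`, an assumed name) absorbs the change, so the analytical shape stays stable.

```python
# Raw layer: stored exactly as the (hypothetical) source system emitted it.
raw_orders_v1 = [{"id": "A1", "amt_cents": 4000, "ts": "2025-01-01T10:00:00Z"}]
# Later the source renames amt_cents to amount_cents; raw still keeps it untouched.
raw_orders_v2 = [{"id": "A2", "amount_cents": 1500, "ts": "2025-01-02T09:30:00Z"}]

def to_analytical_order(raw):
    """Translate one raw record into the stable analytical shape."""
    # The upstream rename is handled here, in one place, not in every dashboard.
    cents = raw["amount_cents"] if "amount_cents" in raw else raw["amt_cents"]
    return {
        "order_id": raw["id"],
        "gross_amount_usd": cents / 100,
        "ordered_at_utc": raw["ts"],
    }

analytical_orders = [to_analytical_order(r) for r in raw_orders_v1 + raw_orders_v2]
print(analytical_orders)
```

Downstream consumers read only the analytical names, so the rename never reaches them.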

Clear layers reduce ambiguity, but they do not prevent metric drift on their own. Drift happens when metrics are defined locally instead of centrally. Two dashboards report the same concept with slightly different logic. Both appear reasonable. Neither triggers alarms. Confidence fades slowly.

Centralizing metric definitions inside the data model forces clarity and negotiation early. It turns hidden disagreement into explicit choice. Metrics treated as shared infrastructure age better than metrics treated as convenient calculations.
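A minimal sketch of a shared metric definition follows, assuming metrics can be expressed as functions over rows. The `METRICS` registry, the metric names, and the column names are all hypothetical. The point is only that every consumer asks for a metric by name instead of rewriting its logic.

```python
# Single shared registry: each metric is defined once, by name.
METRICS = {
    "net_revenue_usd": lambda rows: sum(r["gross_usd"] - r["refund_usd"] for r in rows),
    "order_count": lambda rows: len({r["order_id"] for r in rows}),
}

def compute_metric(name, rows):
    """Compute a registered metric; unknown names fail loudly instead of being improvised."""
    if name not in METRICS:
        raise KeyError(f"Unknown metric {name!r}; define it in METRICS first")
    return METRICS[name](rows)

orders = [
    {"order_id": "A1", "gross_usd": 100.0, "refund_usd": 0.0},
    {"order_id": "A2", "gross_usd": 50.0, "refund_usd": 20.0},
]

# Two hypothetical dashboards request the same definition and cannot drift apart.
finance_dashboard = compute_metric("net_revenue_usd", orders)
sales_dashboard = compute_metric("net_revenue_usd", orders)
print(finance_dashboard, sales_dashboard)
```

Changing how net revenue is calculated then becomes one reviewed edit to the registry rather than a hunt through dashboards.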

All of these modeling decisions exist for one reason. Change.

Businesses evolve. Definitions shift. New questions emerge. Systems that embed too much logic too early become fragile under that pressure. Resilient models isolate volatility. They give change a place to land without destabilizing everything else. Over time, this creates calm systems that evolve without drama. That calm is the opposite of technical debt.

Analytics technical debt does not originate in tooling or scale. It originates in modeling decisions that fail to encode intent clearly enough to survive time and change. Clear grain, disciplined naming, layered truth, and shared metrics are not best practices for their own sake. They are mechanisms for preserving meaning under pressure.

A well-modeled analytics platform feels predictable. Changes land cleanly. Numbers invite trust. Decisions move faster because fewer assumptions need to be unpacked. That outcome is not accidental. It is the cumulative result of early judgment, made visible.

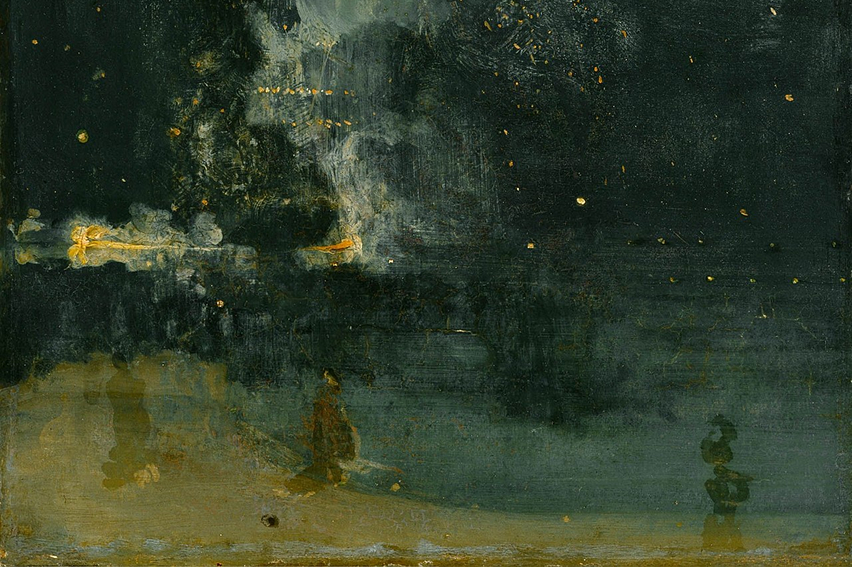

About the Art

Las Hilanderas (The Spinners) (1657), by Diego Velázquez, is a painting about what happens before the final image exists. The foreground is all process, preparation, and material work. The finished tapestry only appears later, in the background. That mirrors analytics modeling closely. The visible outcomes (dashboards, metrics, decisions) depend entirely on the early, often invisible choices about structure and intent. When that foundational work is rushed or left implicit, the final picture may exist, but it won’t hold meaning for long.

Credits: https://commons.wikimedia.org/wiki/File:Velazquez-las_hilanderas.jpg#/media/File:Velazquez-las_hilanderas.jpg
