Engineering a reliable link between data spend, platform behavior, and business value.

December 16, 2025

We have entered an era of data saturation. Tools abound that promise visibility; dashboards proliferate, tracing every query and logging every credit consumed. This is the age of observability. Yet for many leaders, a profound frustration remains. Seeing everything is not the same as understanding anything. True power lies not in observation, but in prediction. The strategic leap forward is the transformation of a reactive data platform into a predictable financial asset. This is the journey from observability to CFO-aligned predictability.

Most organizations believe they have a handle on their data spend because they can see it. They receive the monthly Snowflake invoice, they have charts showing warehouse utilization, they might even have alerts for long-running queries. This is a seductive illusion.

Observability, without a rigorous framework for interpretation, creates a form of sophisticated helplessness. It amplifies noise. Teams are bombarded with symptoms (a spike here, a timeout there) and descend into reactive firefighting. They tune a query today only to face a new, equally expensive one tomorrow. The platform becomes a black box that outputs both dashboards and shocking invoices, with no clear connective tissue between the two.

The cost is measured in more than credits. It is measured in the erosion of trust. When engineering teams are perpetually on the defensive, explaining cost overruns, and business leaders cannot rely on stable performance or predictable budgets, data initiatives stall. The platform becomes a liability to be managed, not an engine for growth.

To move beyond this cycle, we must reject superficial explanations. A high credit bill is not a cause; it is the final outcome of a series of interconnected systemic failures. Strong visibility, of the kind that leads to predictability, requires us to dissect these systems with discipline.

The first domain is architectural entropy. Over time, without a governing vision, data platforms accumulate sprawl. Redundant pipelines are built because the existing ones are opaque or fragile. Data is materialized in multiple places under different names, leading not just to storage bloat but to massive, hidden compute costs as downstream processes compete to transform the same raw data. Inefficient data models, often born from urgent tactical needs, become permanent fixtures, forcing every subsequent query to perform unnecessary joins and scans across terabytes.

The second domain is workflow negligence. Warehouses are left running under auto-suspend settings that are financially illiterate, billing for idle compute long after the last query has finished. User consumption is entirely ungoverned, with analysts executing exploratory queries on multi-cluster XL warehouses as a default. Scheduled pipelines overlap and conflict, creating resource contention that drives up costs while slowing everything down. This is not a failure of tools, but of operational discipline.
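To put a number on a loose auto-suspend setting, here is a minimal Python sketch that estimates the credits a warehouse bills while idle. It assumes Snowflake's standard-warehouse rates (1 credit per hour at X-Small, doubling with each size) and per-second billing with a 60-second minimum each time the warehouse resumes, and it simplifies suspension to happen exactly `auto_suspend` seconds after the last query ends. The function names and the query timeline are hypothetical; a real estimate would be built from your own query and metering history.

```python
from typing import List, Tuple

# Credits per hour for standard warehouses: each size up doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def billed_seconds(busy: List[Tuple[int, int]], auto_suspend: int) -> Tuple[int, int]:
    """Return (billed_seconds, busy_seconds) for sorted, non-overlapping busy intervals.

    Simplified model (an assumption): the warehouse resumes when a query starts
    and suspends after `auto_suspend` idle seconds; each run session bills at least 60 s.
    """
    billed = 0
    busy_total = 0
    session_start = None
    suspend_at = 0  # time the current session would suspend if no query arrives
    for start, end in busy:
        busy_total += end - start
        if session_start is not None and start <= suspend_at:
            # Query lands before the suspend timer fires: the session continues.
            suspend_at = end + auto_suspend
        else:
            if session_start is not None:
                billed += max(suspend_at - session_start, 60)
            session_start = start
            suspend_at = end + auto_suspend
    if session_start is not None:
        billed += max(suspend_at - session_start, 60)
    return billed, busy_total


def idle_credits(busy: List[Tuple[int, int]], auto_suspend: int, size: str) -> float:
    """Credits billed while the warehouse is running but executing nothing."""
    billed, busy_total = billed_seconds(busy, auto_suspend)
    return (billed - busy_total) * CREDITS_PER_HOUR[size] / 3600


# Hypothetical day: one 30-second dashboard refresh at the top of every hour.
hourly = [(h * 3600, h * 3600 + 30) for h in range(24)]
print(idle_credits(hourly, auto_suspend=600, size="XL"))  # 64.0
print(idle_credits(hourly, auto_suspend=60, size="XL"))   # 6.4
```

In this made-up case, an hourly 30-second refresh on an XL warehouse bills about 64 idle credits a day with a 10-minute auto-suspend, against 6.4 with a 60-second one. The query work is identical; only the idle tail changes.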

The third, and most critical, domain is the separation of action from value. This is where technical metrics divorce from business outcomes. A query may be perfectly optimized from a technical standpoint yet serve no discernible business purpose. An entire pipeline may run flawlessly every night, producing a dataset that no dashboard or decision uses. Observability shows you that the pipeline is healthy; predictability demands you ask why it exists.
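One way to start asking why a pipeline exists is to compare what it writes against what anything reads. The sketch assumes two hypothetical inputs: a mapping from each pipeline to the tables it produces, and a list of (reader, table) access records gathered over some observation window. Any output nobody read in that window is flagged for review; the pipeline and table names are invented.

```python
from typing import Dict, Iterable, List, Set, Tuple


def unread_outputs(
    writes: Dict[str, Set[str]],
    reads: Iterable[Tuple[str, str]],
) -> Dict[str, List[str]]:
    """Return, per pipeline, the tables it writes that nothing read in the window.

    `writes` maps a pipeline name to the tables it produces.
    `reads` holds (reader, table) access records; readers may be
    dashboards, other pipelines, or users.
    """
    read_tables = {table for _reader, table in reads}
    result: Dict[str, List[str]] = {}
    for pipeline, tables in writes.items():
        orphans = sorted(tables - read_tables)
        if orphans:
            result[pipeline] = orphans
    return result


# Hypothetical inventory for illustration only.
writes = {
    "nightly_orders": {"orders_clean", "orders_daily_agg"},
    "legacy_customer_sync": {"customer_snapshot_v2"},
}
reads = [
    ("revenue_dashboard", "orders_daily_agg"),
    ("weekly_forecast", "orders_clean"),
]
print(unread_outputs(writes, reads))
# {'legacy_customer_sync': ['customer_snapshot_v2']}
```

This catches only direct orphans. A table read solely by another pipeline whose own outputs go unread would need a transitive pass over the lineage graph, and a short window can miss legitimate quarterly or annual consumers.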

This is why our approach centers on the forty-eight-hour Deep Scan Audit. The time constraint is intentional. It is not a superficial glance. It is a wartime assessment, a concentrated burst of forensic analysis designed to cut through the noise and isolate the core vectors of waste and value.

We begin by mapping the ecosystem not as a collection of objects, but as a flow of value and cost. We trace a business question from its origin in a report back through the labyrinth of views, tables, pipelines, and warehouses that conspire to answer it. This value stream mapping reveals the absurdities: the seventeen-hop transformation to produce a single KPI, the nightly full refresh of a terabyte table where incremental load would suffice, the analytic workload running on a warehouse sized for bulk loading.
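Counting hops in that trace is mechanical once lineage is written down as a graph. The sketch assumes a hypothetical `upstream` mapping from each object (report, view, table) to the objects it reads from, with no cycles, and returns the longest chain from a given report back to a raw source. In practice the edges might come from a data catalog or access history; every name here is made up.

```python
from functools import lru_cache
from typing import Dict, List, Tuple

# Hypothetical lineage: each object maps to the objects it is built from.
upstream: Dict[str, List[str]] = {
    "kpi_report": ["vw_kpi"],
    "vw_kpi": ["agg_orders", "dim_customer"],
    "agg_orders": ["stg_orders"],
    "stg_orders": ["raw_orders"],
    "dim_customer": ["raw_customers"],
    "raw_orders": [],
    "raw_customers": [],
}


def longest_chain(graph: Dict[str, List[str]], node: str) -> List[str]:
    """Return the longest path from `node` back to a source object.

    Assumes the lineage graph is acyclic; results are memoized per object.
    """

    @lru_cache(maxsize=None)
    def walk(n: str) -> Tuple[str, ...]:
        parents = graph.get(n, [])
        if not parents:
            return (n,)  # a source: nothing further upstream
        deepest = max((walk(p) for p in parents), key=len)
        return (n,) + deepest

    return list(walk(node))


chain = longest_chain(upstream, "kpi_report")
print(len(chain) - 1, "hops:", " <- ".join(chain))
# 4 hops: kpi_report <- vw_kpi <- agg_orders <- stg_orders <- raw_orders
```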

Our scan then targets the engine room: the query history and execution profiles. Here, we look for patterns, not outliers. We seek the repeating, expensive scan patterns that point to missing clustering keys. We identify the automatic reclustering jobs that consume more credits than they save. We decode user behavior, segmenting predictable production workloads from unpredictable, ad-hoc exploration and right-sizing the infrastructure for each.
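Looking for patterns rather than outliers mostly means grouping queries by shape before ranking them. The sketch assumes query-history rows already exported into Python records carrying a query fingerprint (for example, a hash of the query text with literals stripped), elapsed seconds, and partitions scanned versus total. Snowflake's `ACCOUNT_USAGE.QUERY_HISTORY` view does expose `PARTITIONS_SCANNED` and `PARTITIONS_TOTAL`, but the record type, thresholds, and sample data here are hypothetical. A shape that runs often, scans most of its partitions every time, and adds up to a lot of runtime is a candidate for a clustering-key review, not proof that one is needed.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass
class QueryRecord:
    fingerprint: str  # hypothetical: hash of query text with literals removed
    elapsed_s: float
    partitions_scanned: int
    partitions_total: int


def scan_heavy_patterns(
    records: Iterable[QueryRecord], min_runs: int = 10, min_scan_ratio: float = 0.8
) -> List[Tuple[str, int, float, float]]:
    """Group by fingerprint; return repeating, poorly pruned patterns, costliest first."""
    groups: Dict[str, List[QueryRecord]] = defaultdict(list)
    for r in records:
        groups[r.fingerprint].append(r)
    flagged = []
    for fp, runs in groups.items():
        scanned = sum(r.partitions_scanned for r in runs)
        total = sum(r.partitions_total for r in runs)
        ratio = scanned / total if total else 0.0
        if len(runs) >= min_runs and ratio >= min_scan_ratio:
            flagged.append((fp, len(runs), round(ratio, 2), sum(r.elapsed_s for r in runs)))
    # Rank by total elapsed time as a rough proxy for compute spend.
    return sorted(flagged, key=lambda row: row[3], reverse=True)


# Hypothetical sample: a repeating full-scan shape, a well-pruned shape, a rare outlier.
sample = (
    [QueryRecord("orders_by_region", 40.0, 950, 1000) for _ in range(30)]
    + [QueryRecord("orders_by_day", 2.0, 12, 1000) for _ in range(200)]
    + [QueryRecord("one_off_export", 900.0, 1000, 1000)]
)
print(scan_heavy_patterns(sample))
# [('orders_by_region', 30, 0.95, 1200.0)]
```

The single 900-second export is the biggest outlier, yet it is not flagged: it ran once, so tuning it will not change next month's invoice the way fixing a shape that runs thirty times will.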

The deliverable is a commander’s brief. It is a direct, unambiguous document that states: here are your three primary sources of credit leakage, here is the precise technical root cause of each, here is the estimated monthly savings for remediation, and here is the sequence of actions to execute. It moves immediately from diagnosis to prescription. This is predictability in action: a clear line of sight from a technical adjustment to a financial outcome on the next invoice.
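The arithmetic behind the savings line is simple enough to show. The sketch takes a hypothetical list of findings, each with the monthly credits attributed to it and an assumed fraction of those credits that remediation would remove, converts the savings to currency at an assumed price per credit, and keeps the top three. Every figure is a placeholder; in a real brief the attributed credits would come from metering data and the reduction fraction from the root-cause analysis.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Finding:
    name: str
    monthly_credits: float     # credits attributed to this source of leakage
    expected_reduction: float  # assumed fraction removed by remediation, 0..1


def top_savings(
    findings: List[Finding], price_per_credit: float, n: int = 3
) -> List[Tuple[str, float]]:
    """Return (name, monthly_savings) for the n findings with the largest savings."""
    ranked = sorted(
        ((f.name, f.monthly_credits * f.expected_reduction * price_per_credit) for f in findings),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:n]


# Placeholder figures for illustration only.
findings = [
    Finding("idle XL warehouse (auto-suspend 10 min)", 1900.0, 0.85),
    Finding("nightly full refresh of orders table", 2400.0, 0.60),
    Finding("ad-hoc queries on production warehouse", 800.0, 0.50),
    Finding("reclustering on rarely queried table", 300.0, 0.90),
]
for name, savings in top_savings(findings, price_per_credit=3.0):
    print(f"{name}: ~{savings:,.0f} per month")
# idle XL warehouse (auto-suspend 10 min): ~4,845 per month
# nightly full refresh of orders table: ~4,320 per month
# ad-hoc queries on production warehouse: ~1,200 per month
```

Ranking by expected savings rather than raw spend is the point: the full refresh burns the most credits, but the idle warehouse is the larger prize because more of its cost is removable.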

Ultimately, achieving predictability is not a tooling problem. It is a cultural and operational discipline. It requires instilling a mindset where every data asset has a known cost, and every cost is traced to a business outcome.
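Giving every asset a known cost usually starts with allocating shared warehouse credits. The sketch uses one common, simple convention: split each warehouse's metered credits across consumers in proportion to their share of execution time on that warehouse. The consumer tags, warehouse names, and figures are hypothetical, and the rule is an approximation (it spreads idle time across whoever used the warehouse and ignores concurrency), not the only defensible choice.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def allocate_credits(
    metered: Dict[str, float],
    usage: List[Tuple[str, str, float]],
) -> Dict[str, float]:
    """Split each warehouse's metered credits across consumers by execution-time share.

    metered: warehouse -> credits billed for the period.
    usage: (warehouse, consumer, execution_seconds) rows for the same period.
    """
    seconds_by_wh: Dict[str, float] = defaultdict(float)
    seconds_by_pair: Dict[Tuple[str, str], float] = defaultdict(float)
    for wh, consumer, secs in usage:
        seconds_by_wh[wh] += secs
        seconds_by_pair[(wh, consumer)] += secs

    allocated: Dict[str, float] = defaultdict(float)
    for (wh, consumer), secs in seconds_by_pair.items():
        total = seconds_by_wh[wh]
        if total > 0:
            allocated[consumer] += metered.get(wh, 0.0) * secs / total
    # Credits on warehouses with no recorded execution time stay visible, not hidden.
    for wh, credits in metered.items():
        if seconds_by_wh.get(wh, 0.0) == 0:
            allocated["(unattributed)"] += credits
    return dict(allocated)


# Hypothetical month of metering and usage.
metered = {"TRANSFORM_WH": 1000.0, "BI_WH": 300.0, "SANDBOX_WH": 50.0}
usage = [
    ("TRANSFORM_WH", "orders_pipeline", 3000.0),
    ("TRANSFORM_WH", "customer_pipeline", 1000.0),
    ("BI_WH", "revenue_dashboard", 600.0),
    ("BI_WH", "ops_dashboard", 400.0),
]
print(allocate_credits(metered, usage))
# {'orders_pipeline': 750.0, 'customer_pipeline': 250.0, 'revenue_dashboard': 180.0, 'ops_dashboard': 120.0, '(unattributed)': 50.0}
```

Keeping an explicit unattributed bucket means the allocation always sums to the invoice, so any cost that cannot yet be traced to an outcome is still on the table rather than silently absorbed.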

This is where our military-inspired discipline finds its purpose. It is about creating a system of clear accountability and standard procedures. It is about designing architectures with cost as a first-class constraint, not an afterthought. It is about treating the data platform as a finite, precious resource to be deployed with strategic intent, not an infinite cloud to be sprayed with queries.

For the leader who is tired of surprises, who needs their data platform to be a reliable, predictable pillar of their operations, the path forward is clear. You must demand more than observability. You must insist on a predictable, explainable relationship between your investment and your insight. You must move from watching the credits burn to controlling the flame.

Stop managing symptoms. Start engineering predictability. Let us perform the Deep Scan, expose the true sources of drag, and build a data platform that performs with the precision and predictability your strategy requires.

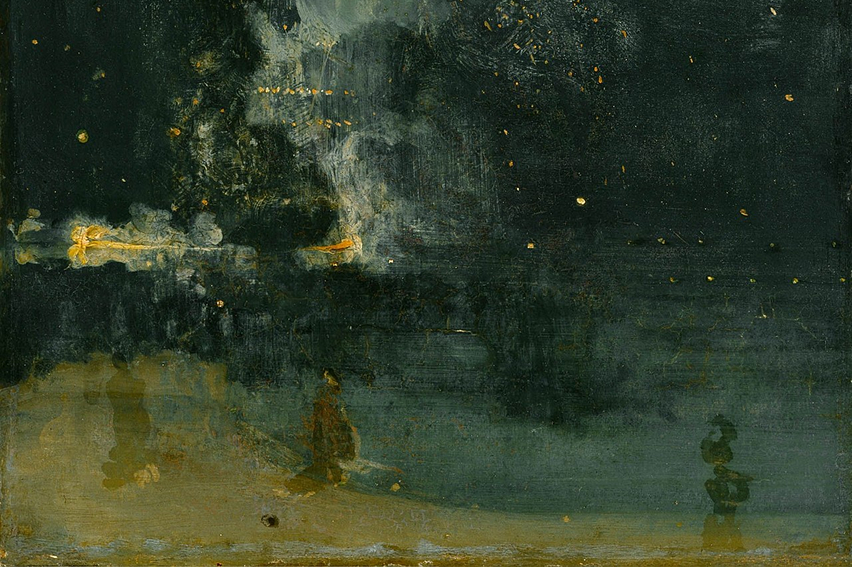

About the Art

Joseph Mallord William Turner's Rain, Steam and Speed (1844) is a painting about motion, force, and consequences. You can feel speed and power, yet the scene is noisy, blurred, and hard to read. That’s how many teams experience modern data spend: the platform is doing a lot, quickly, and the invoice arrives with more certainty than the explanation. The shift from observability to predictability is the work of turning that blur into something legible enough to steer.

Credits: J. M. W. Turner, Public domain, via Wikimedia Commons