Field-level expectations and rules that make pipelines more reliable.

December 18, 2025

Most data pipeline failures are not dramatic server crashes or network outages. They are quiet, insidious breakdowns of trust. A column that contained integers yesterday contains text today. A field defining "customer lifetime value" subtly shifts its calculation logic without announcement. An API endpoint silently deprecates a crucial nested object. These are not technical failures in the traditional sense. They are social and procedural failures manifested in code. They represent a fundamental mismatch between what one team promises and what another team expects.

The cost is measured in wasted engineering hours spent on forensic debugging, in eroded confidence in dashboards and decisions, and in the paralyzing fear of changing anything because the downstream impact is unknowable. This is the data dysfunction that strangles momentum. A data contract is the antidote. It is not merely a schema. It is a formal declaration of behavior, a pact that transforms data from a volatile byproduct of systems into a stable product with clear obligations.

Treating data as a product is the essential mindset shift. When a team builds an application programming interface, they understand they are serving consumers. They craft documentation, version their endpoints, and manage breaking changes with care. Yet, the data streams those applications produce often lack the same discipline. They become implicit, undocumented byproducts.

A data contract makes the product nature of a dataset explicit. It answers the critical questions any consumer has: What can I expect from this data? How can I rely on it? What happens when it needs to change? A schema is the skeleton of this product. The contract is its full specification, including service level agreements, warranty, and the terms of its evolution. It moves the conversation from passive observation of what exists to active agreement on what must be guaranteed.

To be more than a document gathering digital dust, a contract must be precise, machine-readable, and integrated into the development lifecycle. Its strength comes from specificity in several key dimensions.

Field-Level Semantics Define Truth

Declaring a field's data type as string is woefully inadequate. A contract must define what the string means and what rules govern it. For a product_sku field, the contract should specify the exact regular expression pattern it must adhere to. For an order_status field, it must enumerate the allowed values: "PLACED", "FULFILLED", "CANCELLED", and nothing else. For a temperature_celsius field, it must declare the valid range. This turns vague understanding into executable validation. Your pipeline can now reject a record where order_status is "SHIPPED" at the point of ingestion, forcing a conversation about whether this is a new valid state or an error. This is where ambiguity dies.
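As a rough illustration, field-level rules like these can be written down as data and checked by a small function. The sketch below is one possible shape, not a prescribed format: the SKU pattern and the temperature bounds are invented for the example, and only the three order_status values come from the text.

```python
import re

# Hypothetical contract for an orders dataset. The SKU pattern and the
# temperature bounds are assumptions made up for this example.
ORDER_CONTRACT = {
    "product_sku": {"pattern": r"[A-Z]{3}-\d{6}"},
    "order_status": {"enum": ("PLACED", "FULFILLED", "CANCELLED")},
    "temperature_celsius": {"min": -90.0, "max": 60.0},
}


def validate_record(record, contract=ORDER_CONTRACT):
    """Return a list of readable violations; an empty list means the record passes."""
    violations = []
    for field, rules in contract.items():
        if field not in record:
            violations.append(f"{field}: missing")
            continue
        value = record[field]
        # Pattern rule: the whole string must match the regular expression.
        if "pattern" in rules and not (
            isinstance(value, str) and re.fullmatch(rules["pattern"], value)
        ):
            violations.append(f"{field}: {value!r} does not match {rules['pattern']}")
        # Enumeration rule: only the listed values are allowed.
        if "enum" in rules and value not in rules["enum"]:
            violations.append(f"{field}: {value!r} is not an allowed value")
        # Range rule: the value must be numeric and inside the closed interval.
        if "min" in rules and not (
            isinstance(value, (int, float)) and rules["min"] <= value <= rules["max"]
        ):
            violations.append(
                f"{field}: {value!r} is outside [{rules['min']}, {rules['max']}]"
            )
    return violations
```

A record whose order_status is "SHIPPED" comes back with a single violation naming that field, which is the signal to start the conversation about whether the state is new or wrong.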

The Explicit Mandate of Nulls and Absence

The question of nullability is rarely about technology. It is about business logic. Allowing a null in a field is a statement. It says, "For this record, this information may be legitimately absent or unknown." A contract must force this decision into the open. It should state not just if a field can be null, but under what business conditions. Furthermore, it should set measurable expectations for data quality. A field like customer_email might be nullable, but the contract could include a service level objective: "This field shall be populated for no less than ninety-five percent of records in any daily batch." This transforms quality from a vague hope into a verifiable condition of the agreement.
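A population objective like the one quoted above can be checked per batch with a few lines. This is a minimal sketch that assumes a batch is a list of dictionaries and that a missing key counts the same as a null; the function names are hypothetical.

```python
def population_rate(records, field):
    """Fraction of records in which `field` is present and not None."""
    if not records:
        # Assumption: an empty batch trivially meets the objective.
        return 1.0
    populated = sum(1 for record in records if record.get(field) is not None)
    return populated / len(records)


def meets_slo(records, field, minimum=0.95):
    """True when the populated fraction is at least the agreed minimum."""
    return population_rate(records, field) >= minimum
```

Running `meets_slo(batch, "customer_email")` once per daily batch turns the ninety-five percent clause into a pass or fail result that can be logged or alerted on.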

The Golden Rule: Backward Compatibility as a Social Contract

This is the core tenet that prevents breakages. A robust data contract enforces a policy of backward compatibility for all non-negotiated changes. In practice, this means the rules of safe evolution are codified. You may add new optional fields. You may relax constraints, such as making a non-nullable field nullable. These are safe operations in the schema sense, because data written under the old contract still satisfies the new one, though consumers that assumed a field was always populated should still be told. What you may not do unilaterally is perform a breaking change: deleting a field, changing its fundamental data type, or making a nullable field non-nullable.
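The evolution rules in this section are mechanical enough to check automatically. The sketch below compares two versions of a contract and lists the changes that count as breaking. The contract shape (field name mapped to a type and a nullable flag) is an assumption for the example, and "optional" is interpreted here as "nullable".

```python
def breaking_changes(old, new):
    """List the differences between two contract versions that break consumers.

    Each contract maps a field name to {"type": str, "nullable": bool}.
    """
    problems = []
    for name, old_spec in old.items():
        if name not in new:
            problems.append(f"{name}: field removed")
            continue
        new_spec = new[name]
        if new_spec["type"] != old_spec["type"]:
            problems.append(
                f"{name}: type changed from {old_spec['type']} to {new_spec['type']}"
            )
        if old_spec["nullable"] and not new_spec["nullable"]:
            problems.append(f"{name}: nullable field made non-nullable")
    for name, spec in new.items():
        # Assumption: a new field is only safe to add when it is optional.
        if name not in old and not spec["nullable"]:
            problems.append(f"{name}: new field is required, not optional")
    return problems
```

Run in a pull request check, an empty result means the change can merge on its own, while any entry routes the proposal into the change management process.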

This policy is not a technical limitation. It is a social agreement that respects the consumer. It states that the producer bears the burden of stability. When a breaking change is necessary, it triggers the change management process, not a pipeline failure. This single rule removes most "mysterious" breakages, because the careless actions that usually cause them can no longer reach consumers unannounced.

Governed Change Management: The Procedure for Evolution

A contract that cannot be changed is useless. A contract that can be changed casually is equally useless. Therefore, the process for change is as important as the contract itself. This is where discipline separates the professional from the amateur.

A change to a data contract should follow a formalized flow. A producer seeking to make a breaking change must first propose it, often via a pull request or change ticket. This proposal should include impact analysis, a migration path for consumers, and a deprecation timeline. For example, "We propose deprecating the field legacy_id. A new field standardized_id is now available. The legacy_id field will be maintained for ninety days, after which it will be removed from the contract and the data stream." This proposal is reviewed, often by a cross-team data governance group or directly by affected consumers. Only upon approval is the change sequenced into the pipeline. This process replaces surprise with predictability.
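The deprecation timeline in a proposal like this can be recorded as data so that tooling, not memory, decides when a field may be dropped. The sketch below is a hypothetical structure for that record; the class name, attribute names, and the announcement date in the usage note are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Deprecation:
    """One approved deprecation: which field goes away and when."""

    field_name: str
    replacement: str
    announced_on: date
    grace_days: int = 90  # the ninety-day window from the example proposal

    @property
    def removal_date(self):
        """First day on which the field may be removed."""
        return self.announced_on + timedelta(days=self.grace_days)

    def may_remove(self, today):
        """True once the grace period has fully elapsed."""
        return today >= self.removal_date
```

For instance, `Deprecation("legacy_id", "standardized_id", date(2025, 1, 1))` reports a removal date of 1 April 2025, and a check in the producer's build can refuse to drop legacy_id before that day.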

The mechanics matter. A contract defined in a wiki is a suggestion. A contract defined in code and integrated into CI/CD is a law.

Start by writing contracts in a structured, machine-readable format like JSON Schema or Protobuf. These formats allow for rich validation rules. Store these contract definitions in a version-controlled repository alongside the code that produces the data. Use a schema registry or a custom validation service to act as a gatekeeper. Every data payload, whether from a streaming source or a batch job, must be validated against its contract version before being admitted to the lake or warehouse. The pipeline fails fast, at the moment of violation, with a clear error pointing to the broken clause.
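To make the gatekeeper idea concrete, here is a deliberately small stand-in written in plain Python. It is not JSON Schema, Protobuf, or a real schema registry: the in-memory `REGISTRY`, the `admit` function, and the `ContractViolation` exception are hypothetical names, and contracts are reduced to expected Python types per field.

```python
class ContractViolation(Exception):
    """Raised when a payload breaks a clause of its contract."""


# Hypothetical in-memory registry keyed by (dataset, version). A real setup
# would load these definitions from the version-controlled repository.
REGISTRY = {
    ("orders", 1): {"order_id": int, "order_status": str},
    ("orders", 2): {"order_id": int, "order_status": str, "standardized_id": str},
}


def admit(dataset, version, payload):
    """Validate one payload against its contract version, or raise immediately."""
    try:
        contract = REGISTRY[(dataset, version)]
    except KeyError:
        raise ContractViolation(f"{dataset} v{version}: no such contract") from None
    for name, expected in contract.items():
        if name not in payload:
            raise ContractViolation(
                f"{dataset} v{version}: clause '{name}' violated: field missing"
            )
        if not isinstance(payload[name], expected):
            raise ContractViolation(
                f"{dataset} v{version}: clause '{name}' violated: "
                f"expected {expected.__name__}, got {type(payload[name]).__name__}"
            )
    return payload
```

The point of the design is the failure mode: the first broken clause stops the load, and the error names the dataset, the contract version, and the field, so nobody has to reconstruct the cause from a downstream symptom.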

This automated enforcement is critical. It moves the validation left, to the earliest possible moment, and removes human gatekeeping from routine checks. It ensures the contract is not an ideal to aspire to but a living, enforced reality of the system.

The ultimate goal of implementing data contracts is not to create paperwork or process for its own sake. It is to unlock velocity. It seems counterintuitive. More rules, more validation, more process surely must slow things down.

The opposite is true. Without contracts, every change is fraught with hidden risk. Teams move slowly because they fear the unknown downstream impact. They duplicate data pipelines to create "safe" copies. They avoid necessary refactoring. This is the true cost of chaos.

With strong, enforced data contracts, teams gain a bounded zone of safety. Producers understand their obligations and can innovate within them. Consumers can build with confidence, knowing the foundation will not crumble unexpectedly. The time once spent on debugging and reconciliation is redirected to building new features and deriving new insights. The pipeline stops being a fragile chain of whispers and becomes a reliable conduit of structured truth.

This is how you eliminate data dysfunction. Not with a grand, singular technological solution, but with the methodical, disciplined application of good engineering practice to the data product lifecycle. You replace uncertainty with clarity, and in that clarity, you find the stability required for genuine strategic growth.

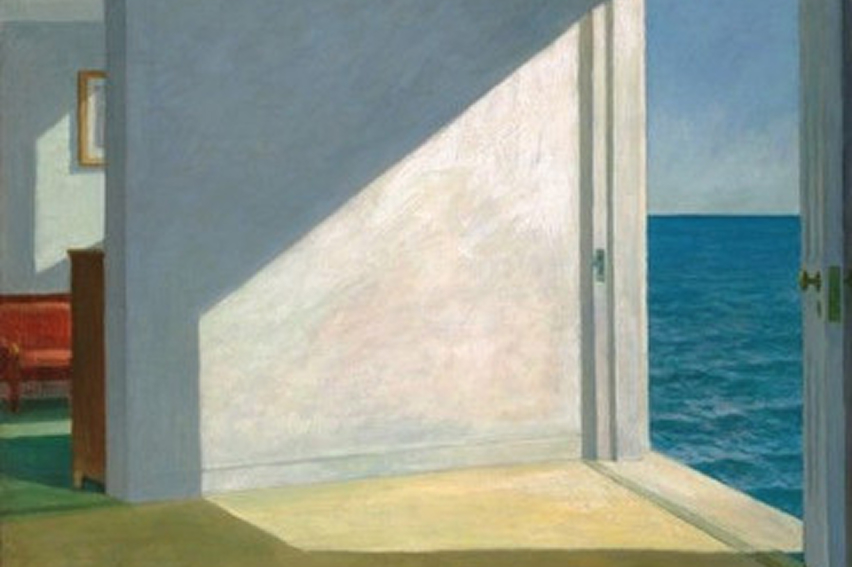

About the Art

We picked Edward Hopper's Rooms by the Sea (1951) because of the way spaces are separated. Each room is clear. Each opening leads somewhere specific. The transition between inside and outside is unmistakable. That sense of separation aligns with the role of data contracts. They define where responsibility begins and ends. When those edges are clear, movement becomes safe. When they aren’t, everything feels exposed.

Credits: https://artgallery.yale.edu/collections/objects/52939
